The procedure to patch/upgrade ZDLRA is not complicated but, as usual, some details need to be checked before starting. Since ZDLRA is an engineered system based on Exadata, part of the procedure may require upgrading the Exadata stack too. It is, however, possible to upgrade just the recovery appliance library.

Whether or not you need to upgrade the Exadata stack, the upgrade of the recovery appliance library is the same: the commands and checks do not change. The procedure described in this post covers the upgrade of the recovery appliance library. The Exadata stack is covered in another post.

Where we are

Before even starting the patch/upgrade, it is important to know exactly which version you are running. To do this, execute the command racli version on your database node:

[root@zeroinsg01 ~]# racli version

Recovery Appliance Version:

exadata image: 19.2.3.0.0.190621

rarpm version: ra_automation-12.2.1.1.2.201907-30111072.x86_64

rdbms version: RDBMS_12.2.0.1.0_LINUX.X64_RELEASE

transaction : kadjei_julpsu_ip2

zdlra version: ZDLRA_12.2.1.1.2.201907_LINUX.X64_RELEASE

[root@zeroinsg01 ~]#

With this, we can discover the ZDLRA version running (12.2.1.1.2.201907 in this case), and the Exadata image version (19.2.3.0.0.190621).
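If you want to capture these values in a script (for example, to record the starting point before patching), the output can be parsed with standard tools. The sketch below assumes the output keeps the "name: value" layout shown above; the function names ra_version_field and ra_release are mine, not part of racli.

```shell
# Print the value of one field from `racli version` output read on stdin.
# Assumes "name: value" lines as shown in this post.
# Usage: racli version | ra_version_field "exadata image"
ra_version_field() {
  awk -F': *' -v key="$1" '
    {
      k = $1
      sub(/^ +/, "", k)   # trim leading spaces from the field name
      sub(/ +$/, "", k)   # trim trailing spaces (e.g. "transaction :")
    }
    k == key { print $2; exit }
  '
}

# Print only the release number from the "zdlra version" field,
# e.g. ZDLRA_12.2.1.1.2.201907_LINUX.X64_RELEASE -> 12.2.1.1.2.201907
# Usage: racli version | ra_release
ra_release() {
  ra_version_field "zdlra version" | sed -e 's/^ZDLRA_//' -e 's/_LINUX.*$//'
}
```

The release number printed by ra_release is the value to look up in the compatibility matrix discussed in the next section.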

Supported versions

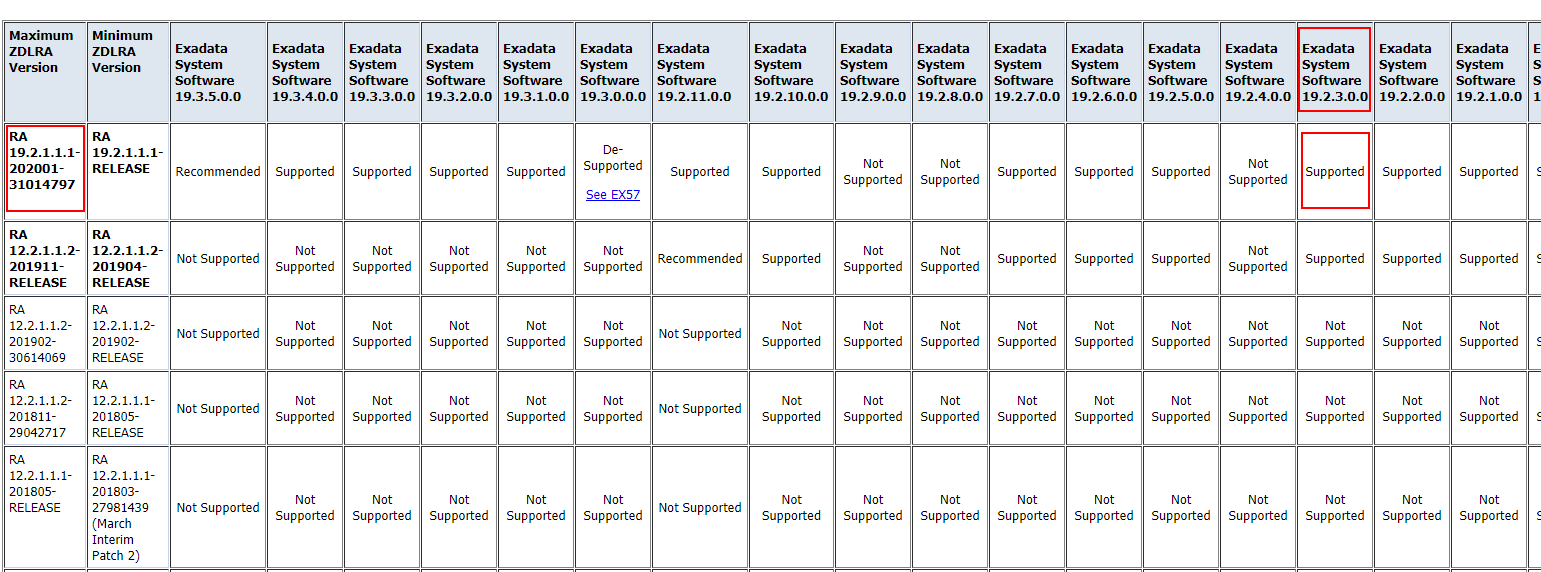

Whatever needs to be upgraded, the starting point is note 1927416.1, which covers the supported versions for ZDLRA. There you can find all the supported versions of the recovery appliance library as well as the Exadata versions. Please do not upgrade the Exadata stack to a version that is not listed on this page.

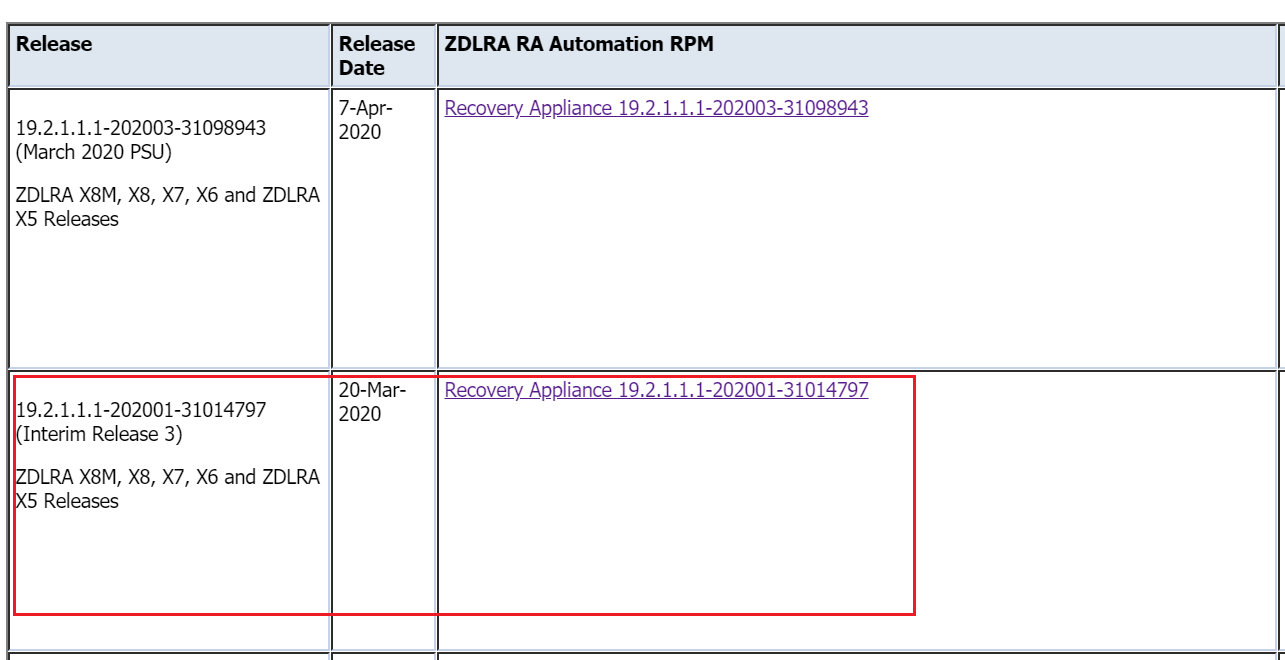

In the note, you can choose the target version. In this post, my target version is 19.2.1.1.1-202001-31014797, patch 31014797 (Patch 31014797: ZDLRA 19.2.1.1.1.202001 (JAN) INTERIM PATCH 3). With this, we can check what we can use and do. The first thing to check is the compatibility matrix between the ZDLRA version and the Exadata version.

Since we know the Exadata image version that we are running and the ZDLRA version that we want to reach, we can see whether they are compatible.

Look at the image above: the target ZDLRA version and the Exadata version currently running are marked. As you can see, this pair is supported, and we can continue. But first, some details.

When you are jumping many versions, you may need to upgrade in more than one hop: it may be necessary to first apply an intermediate version of the Exadata software or of ZDLRA before you reach your desired version.

Another thing to take care of is the switch version for your Exadata. Again, if you are jumping many versions, you may need to upgrade the InfiniBand/RoCE switches more than once: first to an intermediate version and then to the desired one.

Who does what?

Unlike Exadata patching for Oracle Home and Grid Infrastructure (where you are responsible for applying the patches), it is ZDLRA itself that upgrades OH and GI. So, the patch/upgrade for ZDLRA includes the needed PSUs for GI and OH.

But it is fundamental to check the readme of the ZDLRA release that will be applied. In this case, since the current version of GI and OH is 12.2 and the target is 19c (for both), some details need to be checked because the requirements must be met.

In this case, the readme can be used as a guide, but the standard documentation for GI update and upgrade can be used too. The ZDLRA patch will fix/set some parameters of the operating system, but this does not cover every corner case.

It is important to say that if something is wrong with the system, it is a requirement to open an SR with the ZDLRA team. ZDLRA is an engineered system more closed than Exadata; every change needs to be validated beforehand.

The ZDLRA patch will run cluvfy for GI, and this will point out what needs to be fixed. One example of something that needs to be fixed beforehand is HAIP: it needs to be removed. I wrote a post on how to do that, you can access it here.

Since this patch/upgrade will upgrade GI from 12.2 to 19c, we can use note 2542082.1 as a base to crosscheck whether something needs to be fixed when (or if) it is pointed out by cluvfy. Some examples are hugepages that need to be increased, and leftover NTP files that need to be cleaned from the system.

The idea of doing the crosscheck manually beforehand is to avoid errors during the racli upgrade. One example for HAIP:

[root@zeroinsg01 ~]# /opt/oracle.RecoveryAppliance/bin/racli upgrade appliance --step=2;

Created log /opt/oracle.RecoveryAppliance/log/racli_upgrade_appliance.log

Step [2 of 5]: <racli upgrade appliance>

Mon Dec 9 13:20:16 2019: Start: Upgrade Recovery Appliance - Step [PreCheck]

...

...

Mon Dec 9 13:40:32 2019: Failed: Cluster Pre Check -

Verifying Physical Memory

...

Verifying Kernel retpoline support ...PASSED

Pre-check for cluster services setup was unsuccessful.

Checks did not pass for the following nodes:

zeroinsg02,zeroinsg01

Failures were encountered during execution of CVU verification request "stage -pre crsinst".

Verifying Node Connectivity ...FAILED

zeroinsg02: PRVG-11068 : Highly Available IP (HAIP) is enabled on the nodes

"zeroinsg01,zeroinsg02".

zeroinsg01: PRVG-11068 : Highly Available IP (HAIP) is enabled on the nodes

"zeroinsg01,zeroinsg02".

Verifying RPM Package Manager database ...INFORMATION

PRVG-11250 : The check "RPM Package Manager database" was not performed because

it needs 'root' user privileges.

CVU operation performed: stage -pre crsinst

Date: Dec 9, 2019 1:38:46 PM

CVU home: /u01/app/19.0.0.0/grid/

User: oracle

Failed: 1 ()

at /u01/app/oracle/product/12.2.0.1/dbhome_1/perl/lib/site_perl/5.22.0/RA/Install.pm line 1417.

[root@zeroinsg01 ~]#
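When the precheck fails like this, the useful parts are the checks marked FAILED and the PRVG codes that explain them. A small filter can pull both out of a saved copy of the output. This is only a sketch based on the layout of the CVU output shown above; cvu_failures is a name I made up, and note that it lists every PRVx code it sees, including informational ones such as PRVG-11250.

```shell
# Summarize CVU output read on stdin:
#  - one "FAILED: <check name>" line per check that ended in ...FAILED
#  - one "CODE: <PRVx-nnnnn>" line per distinct message code found
# Usage: cvu_failures < saved_precheck_output.txt
cvu_failures() {
  awk '
    /^ *Verifying .*\.\.\.FAILED/ {
      sub(/ *\.\.\.FAILED.*/, "")   # drop the status suffix
      sub(/^ *Verifying /, "")      # drop the "Verifying" prefix
      print "FAILED: " $0
      next
    }
    match($0, /PRV[A-Z]-[0-9]+/) {
      code = substr($0, RSTART, RLENGTH)
      if (!(code in seen)) {        # print each code only once
        seen[code] = 1
        print "CODE: " code
      }
    }
  '
}
```

For the failure above, this reduces the output to the Node Connectivity check and PRVG-11068 (HAIP), which is exactly the item to fix before running step 2 again.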

Patching/Upgrading

1 – Download the release

Depending on what you need or want to upgrade, you need to download one or more patches. For this post, I will use just the recovery appliance RPM.

So, the first step was to download the patch Recovery Appliance 19.2.1.1.1-202001-31014797:

This release contains all the PSUs and patch sets needed for the upgrade (if needed). But there is one detail: you need to download the base release (for GI and OH) if you are upgrading the version of ZDLRA.

Reading the readme of the Recovery Appliance patch, we can see that it will upgrade the GI and OH to 19c. The patch for ZDLRA includes just the PSUs; it does not contain the base release of GI 19c and OH 19c. You need to download them separately.

In this post, I will do that. So, it is necessary to download the RDBMS binary zip file (V982063-01.zip) and the Grid binary zip file (V982068-01.zip) from https://edelivery.oracle.com. If you do not download them, you will receive an error when trying to apply the patch.

2 – Where to put the files

All the files for ZDLRA need to be stored in /radump on database server node 01. This location is fixed in the procedure and it is a requirement.

As a best practice, I recommend that, before copying the new files, you remove all older patches from /radump on both nodes. This includes files that come inside the ZDLRA patch (like ra_init_param_check.pl, load_init_param.sh, load_init_param.pl, dbmsrsadm.sql, dbmsrsadmpreq.sql, prvtrsadm.sql, ra_preinstall.pl).

So, I copied the files from my NFS to /radump:

[oracle@zeroinsg01 ~]$ cd /radump/

[oracle@zeroinsg01 radump]$

[oracle@zeroinsg01 radump]# cp /tmp/zfs/EXADATA_PATCHING/19c/Exadata-Patch/19c-Grid/V982068-01.zip /radump/

[oracle@zeroinsg01 radump]# cp /tmp/zfs/EXADATA_PATCHING/19c/Exadata-Patch/19c-OH/V982063-01.zip /radump/

[oracle@zeroinsg01 radump]$ cp /tmp/zfs/ZDLRA_PATCHING/19.2.1.1.1-202001-31014797/p31014797_192111_Linux-x86-64.zip /radump/

[oracle@zeroinsg01 radump]$

Files copied:

- GI Binary installation.

- OH Binary installation.

- ZDLRA RPM.
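Since a missing base release only shows up as an error later in the upgrade, it can be worth confirming that all three files are really in place before going on. The helper below is a minimal sketch of such a check; check_staged_files is a hypothetical name, and the check only verifies that each file exists and is not empty (it does not validate checksums).

```shell
# Report which of the expected files are present (and non-empty) in a
# staging directory. Returns non-zero if at least one file is missing.
# Usage: check_staged_files /radump V982068-01.zip V982063-01.zip \
#            p31014797_192111_Linux-x86-64.zip
check_staged_files() {
  dir=$1
  shift
  missing=0
  for f in "$@"; do
    if [ -s "$dir/$f" ]; then
      echo "OK       $f"
    else
      echo "MISSING  $f"
      missing=1
    fi
  done
  return "$missing"
}
```

The file names in the usage comment are the ones used in this post; for another release, take the names from the readme of the patch you are applying.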

3 – Unzip

After copying to /radump, it is necessary to unzip the ZDLRA release. Only this file needs to be unzipped.

[oracle@zeroinsg01 radump]$ unzip p31014797_192111_Linux-x86-64.zip

Archive: p31014797_192111_Linux-x86-64.zip

inflating: set_env.sh

extracting: p6880880_180000_Linux-x86-64.zip

inflating: dbmsrsadmpreq.sql

inflating: dbmsrsadm.sql

inflating: prvtrsadm.sql

inflating: create_raoratab.pl

inflating: create_raoratab.sh

inflating: get_versions.pl

inflating: get_versions.sh

replace ra_init_param_check.pl? [y]es, [n]o, [A]ll, [N]one, [r]ename: A

inflating: ra_init_param_check.pl

inflating: ra_init_param_check.sh

inflating: ra_precheck.pl

inflating: ra_precheck.sh

inflating: ra_preinstall.pl

inflating: ra_automation-19.2.1.1.1.202001-31014797.x86_64.rpm

inflating: run_set_env.sh

extracting: p28279612_190000_Linux-x86-64.zip

extracting: p29232533_190000_Linux-x86-64.zip

extracting: p29708769_190000_Linux-x86-64.zip

extracting: p29908639_190000_Linux-x86-64.zip

extracting: p30143796_190000_Linux-x86-64.zip

extracting: p30143796_194000DBRU_Linux-x86-64.zip

extracting: p30312546_190000_Linux-x86-64.zip

inflating: README.txt

[oracle@zeroinsg01 radump]$ logout

[root@zeroinsg01 ~]#

As you can see, the patch contains all PSUs for GI and DB.

4 – RA_PREINSTALL

The next step is to execute the preinstall as root on the first node only. This step copies the ZDLRA binary files to the /opt/oracle.RecoveryAppliance/ folder (on both nodes).

Besides that, it removes the old RPM library, installs the new version, and prepares the system for it. The changes in this phase are usually small (like crontab, oratab, and environment variables).

To do the preinstall, you need to execute the command “perl ra_preinstall.pl” as root, from inside the /radump folder.

[root@zeroinsg01 ~]# cd /radump/

[root@zeroinsg01 radump]#

[root@zeroinsg01 radump]# perl ra_preinstall.pl

Start: Running ra_preinstall.pl on zeroinsg01.

NOTE:

Current deployed RPM [ra_automation-12.2.1.1.2.201907-30111072.x86_64.rpm] not found!

If you continue without an old RPM, rollback will not be possible.

Refer to the README.txt included in this ZDLRA Patch for more details.

Do you want to continue? (y|n): y

Note:

The ra_preinstall.pl manages the ra_automation RPM,

and provides a --rollback option.

The RPM is updated during ra preinstall. Rollback is feasible if the old rpm is found.

You do not need to update RPM separately.

Refer to the README.txt included in this ZDLRA Patch for more details.

Do you want to continue (y|n): y

Deployed RPM: ZDLRA_12.2.1.1.2.201907_LINUX.X64_RELEASE

Installed RPM: ZDLRA_12.2.1.1.2.201907_LINUX.X64_RELEASE

RPM matches.

Fuse group already exists. Skipping.

Start Update sshd_config

End Update sshd_config

End: Running ra_preinstall.pl on zeroinsg01.

Copying ra_preinstall.pl, create_raoratab.* and new RPM to remote node zeroinsg02. Password may be required to connect.

ra_preinstall.pl 100% 42KB 49.6MB/s 00:00

create_raoratab.pl 100% 3251 6.9MB/s 00:00

create_raoratab.sh 100% 991 3.2MB/s 00:00

run_set_env.sh 100% 1000 3.6MB/s 00:00

set_env.sh 100% 2420 9.0MB/s 00:00

ra_automation-19.2.1.1.1.202001-31014797.x86_64.rpm 100% 668MB 315.8MB/s 00:02

Created log /opt/oracle.RecoveryAppliance/log/create_raoratab.log

Start: Running ra_preinstall.pl on zeroinsg02.

Fuse group already exists. Skipping.

Start Update sshd_config

End Update sshd_config

End: Running ra_preinstall.pl on zeroinsg02.

Start Restart sshd

End Restart sshd

Start: Check Init Parameters

Created log file /opt/oracle.RecoveryAppliance/log/ra_init_param_check.log

All init parameters have been validated.

End: Check Init Parameters

Start: Remove current RPM and install new RPM on zeroinsg01.

End: Remove current RPM and install new RPM on zeroinsg01.

Start: Remove current RPM and install new RPM on zeroinsg02.

End: Remove current RPM and install new RPM on zeroinsg02.

Created log /opt/oracle.RecoveryAppliance/log/raprecheck.log

Start: Run Pre check for Upgrade

Fri Apr 3 14:34:57 2020: Start: Check ZDLRA Services

Fri Apr 3 14:34:59 2020: End: Check ZDLRA Services

Fri Apr 3 14:34:59 2020: Start: Check ASM rebalance

Fri Apr 3 14:34:59 2020: End: Check ASM rebalance

Fri Apr 3 14:34:59 2020: Start: Check Cluster

Fri Apr 3 14:36:07 2020: End: Check Cluster

Fri Apr 3 14:36:13 2020: Start: Check Open Incidents

Fri Apr 3 14:36:13 2020: End: Check Open Incidents

Fri Apr 3 14:36:13 2020: Start: Check Invalid Objects

Fri Apr 3 14:36:15 2020: End: Check Invalid Objects

Fri Apr 3 14:36:15 2020: Start: Check Init Parameters

Fri Apr 3 14:36:16 2020: End: Check Init Parameters

Fri Apr 3 14:36:16 2020: Start: Check compute node oracle access

Fri Apr 3 14:36:16 2020: End: Check compute node oracle access

End: Run Pre check for Upgrade

Start Restart sshd

!!!NOTE!!!

Exit and log back into [zeroinsg01] prior to continuing

!!!NOTE!!!

End Restart sshd

[root@zeroinsg01 radump]#

The ra_preinstall.pl script resides in the /radump folder.

Crucially, after the successful execution, exit from the current session and open a new one. This is important because the execution changes some environment variables, and the next steps need to continue with a clean environment.

Be careful: it is not recommended to restart this ZDLRA after this point. If you think about it, the binaries were changed in some folders, and the libraries/RPM too, but the database itself was not upgraded. Of course, the library is supposed to be interoperable, but if the problem can be avoided, that is preferable.

5 – Cleaning incidents

The upgrade process of ZDLRA checks the internal tables for open incidents (rasys.ra_incident_log) and stops if there are any. It is possible to use the ignore flag, but you can also clean them.

To do that, use the procedure dbms_ra.reset_error:

SQL> set linesize 250

SQL> col FIRST_SEEN format a35

SQL> col LAST_SEEN format a35

SQL> select db_key, db_unique_name, incident_id, task_id, component, status, first_seen, last_seen from ra_incident_log where status = 'ACTIVE' order by last_seen;

DB_KEY DB_UNIQUE_NAME INCIDENT_ID TASK_ID COMPONENT STATUS FIRST_SEEN LAST_SEEN

---------- ---------------- ----------- ---------- ------------------------------ ------ ----------------------------------- -----------------------------------

35221889 ORADBOR1 62631581 62631963 PURGE ACTIVE 29-FEB-20 05.02.18.337256 AM +01:00 29-FEB-20 05.02.19.496775 AM +01:00

17013626 ORADBOR2 63263667 63263348 PURGE ACTIVE 13-MAR-20 08.29.30.942252 AM +01:00 13-MAR-20 08.29.31.454871 AM +01:00

16103868 ORADBOR3 61956897 65037370 RESTORE_RANGE_REFRESH ACTIVE 13-FEB-20 10.32.43.517689 AM +01:00 03-APR-20 02.06.50.383659 PM +02:00

16664140 ORADBOR4 64982290 65037380 RESTORE_RANGE_REFRESH ACTIVE 02-APR-20 12.23.54.004022 PM +02:00 03-APR-20 02.06.50.981807 PM +02:00

35222117 ORADBOR5 59763932 65037420 RESTORE_RANGE_REFRESH ACTIVE 09-DEC-19 12.38.48.811749 PM +01:00 03-APR-20 02.06.55.401646 PM +02:00

23187808 ORADBOR6 61488051 65037453 RESTORE_RANGE_REFRESH ACTIVE 31-JAN-20 10.39.56.704797 AM +01:00 03-APR-20 02.07.00.579596 PM +02:00

39315676 ORADBOR7 61461052 65037444 RESTORE_RANGE_REFRESH ACTIVE 30-JAN-20 06.26.52.256764 PM +01:00 03-APR-20 02.07.01.429492 PM +02:00

39332095 ORADBOR8 51624234 65037439 RESTORE_RANGE_REFRESH ACTIVE 20-MAY-19 05.48.12.051325 PM +02:00 03-APR-20 02.07.04.982031 PM +02:00

8 rows selected.

SQL>

SQL> begin

2 dbms_ra.reset_error(62631581);

3 dbms_ra.reset_error(63263667);

4 dbms_ra.reset_error(61956897);

5 dbms_ra.reset_error(64982290);

6 dbms_ra.reset_error(59763932);

7 dbms_ra.reset_error(61488051);

8 dbms_ra.reset_error(61461052);

9 dbms_ra.reset_error(51624234);

10 end;

11 /

PL/SQL procedure successfully completed.

SQL>

As you can see, the procedure receives as its parameter the value from the column INCIDENT_ID. I picked all the incidents with status ACTIVE.
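With many active incidents, typing the block by hand gets tedious. As a sketch, the block can be generated from a plain list of incident IDs (one per line), for instance a list spooled from the query shown earlier. The function name reset_error_block is hypothetical; the only ZDLRA call it emits is dbms_ra.reset_error, as used above. The generated text should be reviewed before it is run in SQL*Plus.

```shell
# Read incident IDs from stdin (one per line) and print an anonymous
# PL/SQL block that calls dbms_ra.reset_error for each of them.
# Lines that are not purely numeric (headers, blanks) are skipped.
# Usage: reset_error_block < incident_ids.txt > reset_incidents.sql
reset_error_block() {
  echo "begin"
  echo "  null;"   # keeps the block valid even if no ID was supplied
  grep -E '^[0-9]+$' | while read -r id; do
    echo "  dbms_ra.reset_error($id);"
  done
  echo "end;"
  echo "/"
}
```

Generating the script instead of calling the procedure directly keeps a record of exactly which incidents were reset before the upgrade.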

6 – Upgrade appliance, step=1

The next step is the upgrade itself. The previous steps already changed a few things, but here the installation checks node access, the paths of the installation folders, and the init parameters of the database. Other checks involve ASM (whether all disks are online, as an example).

[root@zeroinsg01 ~]# /opt/oracle.RecoveryAppliance/bin/racli upgrade appliance --step=1

Created log /opt/oracle.RecoveryAppliance/log/racli_upgrade_appliance.log

Step [1 of 5]: <racli upgrade appliance>

Fri Apr 3 20:50:21 2020: Start: Upgrade Recovery Appliance - Step [PreCheck]

Fri Apr 3 20:50:22 2020: Start: Check ZDLRA Services

Fri Apr 3 20:50:24 2020: End: Check ZDLRA Services

Fri Apr 3 20:50:24 2020: Start: Check ASM rebalance

Fri Apr 3 20:50:24 2020: End: Check ASM rebalance

Fri Apr 3 20:50:24 2020: Start: Check Cluster

Fri Apr 3 20:51:28 2020: End: Check Cluster

Fri Apr 3 20:51:32 2020: Start: Check Open Incidents

Fri Apr 3 20:51:32 2020: End: Check Open Incidents

Fri Apr 3 20:51:32 2020: Start: Check Invalid Objects

Fri Apr 3 20:51:34 2020: End: Check Invalid Objects

Fri Apr 3 20:51:34 2020: Start: Check Init Parameters

Fri Apr 3 20:51:34 2020: End: Check Init Parameters

Fri Apr 3 20:51:35 2020: Start: Check compute node oracle access

Fri Apr 3 20:51:35 2020: End: Check compute node oracle access

Fri Apr 3 20:51:35 2020: Start: Check Patch Oracle Grid

Fri Apr 3 20:51:41 2020: End: Check Patch Oracle Grid

Fri Apr 3 20:51:41 2020: Start: Check Patch Oracle Database

Fri Apr 3 20:51:47 2020: End: Check Patch Oracle Database

Fri Apr 3 20:51:47 2020: End: Upgrade Recovery Appliance - Step [PreCheck]

Next: <racli upgrade appliance --step=2>

[root@zeroinsg01 ~]#

7 – Upgrade appliance, step=2

The next is step=2 for “upgrade appliance”. In this step, the process creates the folders for the new GI and OH (if needed, as in an upgrade from 12.2 to 19c), and also does the precheck for GI and OH.

[root@zeroinsg01 ~]# /opt/oracle.RecoveryAppliance/bin/racli upgrade appliance --step=2

Created log /opt/oracle.RecoveryAppliance/log/racli_upgrade_appliance.log

Step [2 of 5]: <racli upgrade appliance>

Mon Apr 6 13:22:45 2020: Start: Upgrade Recovery Appliance - Step [PreCheck]

Mon Apr 6 13:22:46 2020: Start: Check ZDLRA Services

Mon Apr 6 13:22:48 2020: End: Check ZDLRA Services

Mon Apr 6 13:22:48 2020: Start: Check ASM rebalance

Mon Apr 6 13:22:48 2020: End: Check ASM rebalance

Mon Apr 6 13:22:48 2020: Start: Check Cluster

Mon Apr 6 13:23:56 2020: End: Check Cluster

Mon Apr 6 13:24:01 2020: Start: Check Open Incidents

Mon Apr 6 13:24:01 2020: End: Check Open Incidents

Mon Apr 6 13:24:01 2020: Start: Check Invalid Objects

Mon Apr 6 13:24:04 2020: End: Check Invalid Objects

Mon Apr 6 13:24:04 2020: Start: Check Init Parameters

Mon Apr 6 13:24:04 2020: End: Check Init Parameters

Mon Apr 6 13:24:04 2020: Start: Check compute node oracle access

Mon Apr 6 13:24:05 2020: End: Check compute node oracle access

Mon Apr 6 13:24:05 2020: Start: Check Patch Oracle Grid

Mon Apr 6 13:24:10 2020: End: Check Patch Oracle Grid

Mon Apr 6 13:24:10 2020: Start: Check Patch Oracle Database

Mon Apr 6 13:24:16 2020: End: Check Patch Oracle Database

Mon Apr 6 13:24:17 2020: End: Upgrade Recovery Appliance - Step [PreCheck]

Mon Apr 6 13:24:17 2020: Start: Upgrade Recovery Appliance - Step [Stage]

Mon Apr 6 13:25:09 2020: Unpack: DB Software - Started

Mon Apr 6 13:26:04 2020: Unpack: DB Software - Complete

Mon Apr 6 13:26:05 2020: Start: Unpack Patches

Mon Apr 6 13:27:41 2020: Start: Apply 29850993 to /u01/app/19.0.0.0/grid

Mon Apr 6 13:29:13 2020: End: Apply 29850993 to /u01/app/19.0.0.0/grid

Mon Apr 6 13:29:13 2020: Start: Apply 29851014 to /u01/app/19.0.0.0/grid

Mon Apr 6 13:30:29 2020: End: Apply 29851014 to /u01/app/19.0.0.0/grid

Mon Apr 6 13:30:29 2020: Start: Apply 29834717 to /u01/app/19.0.0.0/grid

Mon Apr 6 13:32:42 2020: End: Apply 29834717 to /u01/app/19.0.0.0/grid

Mon Apr 6 13:32:42 2020: Start: Apply 29401763 to /u01/app/19.0.0.0/grid

Mon Apr 6 13:33:34 2020: End: Apply 29401763 to /u01/app/19.0.0.0/grid

Mon Apr 6 13:33:34 2020: Start: Apply 30143796 to /u01/app/19.0.0.0/grid

Mon Apr 6 13:34:51 2020: End: Apply 30143796 to /u01/app/19.0.0.0/grid

Mon Apr 6 13:34:51 2020: Start: Apply 30312546 to /u01/app/19.0.0.0/grid

Mon Apr 6 13:36:33 2020: End: Apply 30312546 to /u01/app/19.0.0.0/grid

Mon Apr 6 13:36:45 2020: Start: Pre Upgrade Cluster Check

Mon Apr 6 13:38:32 2020: End: Pre Upgrade Cluster Check

Mon Apr 6 13:38:32 2020: Start: Update Limits

Mon Apr 6 13:38:32 2020: Skip raext already updated

Skip root already updated

HugePages already updated... Nothing to do.

End: Update Limits

Mon Apr 6 13:38:32 2020: End: Upgrade Recovery Appliance - Step [Stage]

Next: <racli upgrade appliance --step=3>

[root@zeroinsg01 ~]#

If you follow the log you will see these lines:

[root@zeroinsg01 radump]# tail -f /opt/oracle.RecoveryAppliance/log/racli_upgrade_appliance.log

Mon Apr 6 13:24:23 2020: End: Create /usr/etc/ob/obnetconf on zeroinsg01.vel=error -l root zeroinsg01 /bin/echo "my host useonly: 99.201.99.60" > /usr/etc/ob/obnetconf

Mon Apr 6 13:24:23 2020: End: Create IPv6 support Files.

Mon Apr 6 13:24:23 2020: Start: on zeroinsg02.

Mon Apr 6 13:24:23 2020: End: on zeroinsg02.

Mon Apr 6 13:24:23 2020: Start: on zeroinsg01.

Mon Apr 6 13:24:23 2020: End: on zeroinsg01.

Mon Apr 6 13:24:23 2020: Setup: Directory /u01/app/19.0.0.0/grid - Completed

Mon Apr 6 13:24:23 2020: Setup: Directory /u01/app/19.0.0.0/grid - Completed

Mon Apr 6 13:24:23 2020: Set Command '/usr/bin/unzip -nuq /radump//V982068-01.zip -d /u01/app/19.0.0.0/grid' timeout to 900.

...

...

Mon Apr 6 13:26:42 2020: Copying //radump//patch/ to zeroinsg02://radump

Mon Apr 6 13:27:41 2020: End: Sync staged patches

Mon Apr 6 13:27:41 2020: Start: Update OPatch

Mon Apr 6 13:27:41 2020: Set Command '/opt/oracle.RecoveryAppliance/install/deploy_opatch.sh /u01/app/19.0.0.0/grid /radump//p6880880_180000_Linux-x86-64.zip' timeout to 300.

Mon Apr 6 13:27:41 2020: End: Update OPatch

Mon Apr 6 13:27:41 2020: Start: Apply 29850993 to /u01/app/19.0.0.0/grid

Mon Apr 6 13:27:41 2020: Set Command '/u01/app/19.0.0.0/grid/gridSetup.sh -applyOneOffs //radump//patch//gi_opatchauto/29708769/29850993' timeout to 300.

Mon Apr 6 13:29:13 2020: End: Apply 29850993 to /u01/app/19.0.0.0/grid

Mon Apr 6 13:29:13 2020: Start: Apply 29851014 to /u01/app/19.0.0.0/grid

Mon Apr 6 13:29:13 2020: Set Command '/u01/app/19.0.0.0/grid/gridSetup.sh -applyOneOffs //radump//patch//gi_opatchauto/29708769/29851014' timeout to 300.

...

...

Mon Apr 6 13:36:45 2020: Set Command '/bin/su - oracle -c '/u01/app/19.0.0.0/grid/runcluvfy.sh stage -pre crsinst -upgrade -rolling -src_crshome /u01/app/12.2.0.1/grid -dest_crshome /u01/app/19.0.0.0/grid -dest_version 19.3.0.0.0 -fixupnoexec -verbose'' timeout to 900.

Mon Apr 6 13:38:32 2020: End: Pre Upgrade Cluster Check

Mon Apr 6 13:38:32 2020: Switching to UID: 1001, GID: 1001

Mon Apr 6 13:38:32 2020: Start: Update Limits

Mon Apr 6 13:38:32 2020: Set Command '/opt/oracle.RecoveryAppliance/install/update_memlock.sh ' timeout to 300.

Mon Apr 6 13:38:32 2020: Skip raext already updated

Skip root already updated

HugePages already updated... Nothing to do.

End: Update Limits

Mon Apr 6 13:38:32 2020: Start: Getting Remote Nodes.

Mon Apr 6 13:38:32 2020: End: Gathered Remote Nodes.

Mon Apr 6 13:38:32 2020: Start: on zeroinsg02.

Mon Apr 6 13:38:32 2020: End: on zeroinsg02.

Mon Apr 6 13:38:32 2020: End: Upgrade Recovery Appliance - Step [Stage]

Mon Apr 6 13:38:32 2020: End: RunLevel 1000

Mon Apr 6 13:38:32 2020: Start: RunLevel 2000

8 – Upgrade appliance, step=3

The next step is literally the upgrade of the ZDLRA database and catalog, and the move to the new binaries for GI and OH (if needed). The procedure is the same as before, just changing the value of the step:

[root@zeroinsg01 ~]# /opt/oracle.RecoveryAppliance/bin/racli upgrade appliance --step=3

Created log /opt/oracle.RecoveryAppliance/log/racli_upgrade_appliance.log

Step [3 of 5]: <racli upgrade appliance>

Mon Apr 6 13:40:13 2020: Start: Upgrade Recovery Appliance - Step [Upgrade]

Mon Apr 6 13:40:13 2020: Start: Upgrade Recovery Appliance - Step [PreCheck]

Mon Apr 6 13:40:13 2020: Start: Check ZDLRA Services

Mon Apr 6 13:40:15 2020: End: Check ZDLRA Services

Mon Apr 6 13:40:15 2020: Start: Check ASM rebalance

Mon Apr 6 13:40:15 2020: End: Check ASM rebalance

Mon Apr 6 13:40:15 2020: Start: Check Cluster

Mon Apr 6 13:41:23 2020: End: Check Cluster

Mon Apr 6 13:41:27 2020: Start: Check Open Incidents

Mon Apr 6 13:41:27 2020: End: Check Open Incidents

Mon Apr 6 13:41:27 2020: Start: Check Invalid Objects

Mon Apr 6 13:41:28 2020: End: Check Invalid Objects

Mon Apr 6 13:41:28 2020: Start: Check Init Parameters

Mon Apr 6 13:41:29 2020: End: Check Init Parameters

Mon Apr 6 13:41:29 2020: Start: Check compute node oracle access

Mon Apr 6 13:41:30 2020: End: Check compute node oracle access

Mon Apr 6 13:41:30 2020: Start: Check Patch Oracle Grid

Mon Apr 6 13:41:35 2020: End: Check Patch Oracle Grid

Mon Apr 6 13:41:35 2020: Start: Check Patch Oracle Database

Mon Apr 6 13:41:41 2020: End: Check Patch Oracle Database

Mon Apr 6 13:41:41 2020: End: Upgrade Recovery Appliance - Step [PreCheck]

Mon Apr 6 13:41:41 2020: Start: Enable rasys Access

Mon Apr 6 13:41:43 2020: End: Enable rasys Access

Mon Apr 6 13:41:45 2020: Start: Grid Setup

Mon Apr 6 13:49:27 2020: End: Grid Setup

Mon Apr 6 13:49:27 2020: Start: Grid rootupgrade

Mon Apr 6 14:11:13 2020: End: Grid rootupgrade

Mon Apr 6 14:11:13 2020: Start: Grid ExecuteConfigTools

Mon Apr 6 14:31:03 2020: End: Grid ExecuteConfigTools

Mon Apr 6 14:31:03 2020: Skip: Update Tape Cluster Resources - [No Tape Option]

Mon Apr 6 14:31:03 2020: Start: Deploy DB Home

Mon Apr 6 14:36:47 2020: End: Deploying DB Home

Mon Apr 6 14:36:47 2020: Start: on zeroinsg02.

Mon Apr 6 14:36:48 2020: End: on zeroinsg02.

Mon Apr 6 14:36:48 2020: Start: on zeroinsg01.

Mon Apr 6 14:36:48 2020: End: on zeroinsg01.

Mon Apr 6 14:36:48 2020: Start: Patch DB Home

Mon Apr 6 14:41:38 2020: Start: Process Network Files [zeroinsg02]

Mon Apr 6 14:41:38 2020: End: Process Network Files [zeroinsg02]

Mon Apr 6 14:41:38 2020: Start: Process Network Files [zeroinsg01]

Mon Apr 6 14:41:39 2020: End: Process Network Files [zeroinsg01]

Mon Apr 6 14:41:39 2020: End: Patch DB Home

Mon Apr 6 14:41:39 2020: Start: Pre Upgrade DB Updates

Mon Apr 6 15:04:34 2020: End: Pre Upgrade DB Updates

Mon Apr 6 15:04:34 2020: Start: RA DB Upgrade

Mon Apr 6 15:28:29 2020: End: RA DB Upgrade

Mon Apr 6 15:28:29 2020: Start: Shared FS Update

Mon Apr 6 15:29:33 2020: Start: Create TNS Admin

Mon Apr 6 15:31:02 2020: End: Create TNS Admin

Mon Apr 6 15:31:02 2020: Start: Install Appliance Step 1

Mon Apr 6 15:31:03 2020: Start: Preinstall.

Mon Apr 6 15:31:03 2020: Start: on zeroinsg01.

Mon Apr 6 15:31:07 2020: End: on zeroinsg01.

Mon Apr 6 15:31:08 2020: Start: on zeroinsg02.

Mon Apr 6 15:31:11 2020: End: on zeroinsg02.

Mon Apr 6 15:31:11 2020: End: Preinstall.

Mon Apr 6 15:31:11 2020: Preinstall has completed successfully.

Mon Apr 6 15:31:11 2020: End: Install Appliance Step 1

Mon Apr 6 15:31:13 2020: Start: Setup OS

Mon Apr 6 15:31:45 2020: End: Setup OS

Mon Apr 6 15:32:51 2020: Start: Setup RA Shared FS

Mon Apr 6 15:32:52 2020: End: Setup RA Shared FS

Mon Apr 6 15:32:52 2020: Start: Enable Wait For DBFS

Mon Apr 6 15:32:52 2020: End: Enable Wait For DBFS

Mon Apr 6 15:32:52 2020: Start: Upgrade Sys Package

Mon Apr 6 15:33:02 2020: End: Upgrade Sys Package

Mon Apr 6 15:33:02 2020: Start: Update Restart Flag

Mon Apr 6 15:33:02 2020: End: Update Restart Flag

Mon Apr 6 15:33:02 2020: End: Shared FS Update

Mon Apr 6 15:33:02 2020: Start: RA Catalog Upgrade

Mon Apr 6 15:35:26 2020: End: RA Catalog Upgrade

Mon Apr 6 15:35:26 2020: Start: RA DB System Updates

Mon Apr 6 15:35:56 2020: End: RA DB System Updates

Mon Apr 6 15:35:57 2020: Start: Enable Rasys

Mon Apr 6 15:43:52 2020: End: Enable Rasys

Mon Apr 6 15:43:52 2020: Start: Post Upgrade Actions

Mon Apr 6 15:46:14 2020: End: Post Upgrade Actions

Mon Apr 6 15:46:14 2020: End: Upgrade Recovery Appliance - Step [Upgrade]

Next: <racli upgrade appliance --step=4>

[root@zeroinsg01 ~]#

As you can see above, several changes occurred, like:

- rootupgrade for new GI.

- Patch DB.

- Upgrade RA schema/database with new scripts.

- Setup OS with new parameters if needed.

- If not yet, change from DBFS to ACFS.

- Upgrade the ZDLRA catalog.

Under the hood, the log shows some interesting information:

Mon Apr 6 13:42:46 2020: Service rep_dbfs on zeroinsg01 offline.

Mon Apr 6 13:42:46 2020: Service rep_dbfs off on all nodes.

Mon Apr 6 13:42:46 2020: Set Command '/bin/su - oracle -c '/u01/app/19.0.0.0/grid/gridSetup.sh -silent -responseFile /radump/grid_install.rsp'' timeout to 14400.

Mon Apr 6 13:49:27 2020: Grid Setup Output: 24

Mon Apr 6 13:49:27 2020: End: RunLevel 2001

Mon Apr 6 13:49:27 2020: Start: RunLevel 2002

Mon Apr 6 13:49:27 2020: End: Grid Setup

Mon Apr 6 13:49:27 2020: Start: Grid rootupgrade

Mon Apr 6 13:49:27 2020: Set Command '/u01/app/19.0.0.0/grid/rootupgrade.sh ' timeout to 14400.

…

…

Mon Apr 6 14:31:03 2020: End: RunLevel 2004

Mon Apr 6 14:31:03 2020: Start: RunLevel 2005

Mon Apr 6 14:31:03 2020: Start: Deploy DB Home

Mon Apr 6 14:31:03 2020: Set Command '/bin/su - oracle -c ''/u01/app/oracle/product/19.0.0.0/dbhome_1/runInstaller' -responsefile /radump/db_install.rsp -silent -waitForCompletion'' timeout to 3600.

Mon Apr 6 14:36:47 2020: End: Deploying DB Home

…

…

Processing in install validation mode.

Source root is /opt/oracle.RecoveryAppliance/zdlra

Destination root is /u01/app/oracle/product/19.0.0.0/dbhome_1/rdbms

Destination appears to be an installed shiphome.

library = libzdlraserver19.a

Performing object validation for libserver19.a.

Source library: /opt/oracle.RecoveryAppliance/zdlra/lib/libzdlraserver19.a

Destination library: /u01/app/oracle/product/19.0.0.0/dbhome_1/rdbms/../lib/libserver19.a

Using temporary directory /tmp/5X7pPWToJJ for source objects.

Using temporary directory /tmp/8rXvBcr_60 for destination objects.

…

…

- Linking Oracle

rm -f /u01/app/oracle/product/19.0.0.0/dbhome_1/rdbms/lib/oracle

…

Mon Apr 6 14:41:39 2020: Set Command '/u01/app/oracle/product/12.2.0.1/dbhome_1/bin/sqlplus -s / AS SYSDBA <<EOF

@/opt/oracle.RecoveryAppliance/install/dbmsrsadmpreq.sql;

@/opt/oracle.RecoveryAppliance/install/dbmsrsadm.sql;

@/opt/oracle.RecoveryAppliance/install/prvtrsadm.sql;

EOF

' timeout to 0.

Mon Apr 6 15:00:45 2020: End: Set init parameters for pre upgrage 12.2.

…

…

Mon Apr 6 15:05:13 2020: Set Command '/bin/su - oracle -c '/u01/app/oracle/product/19.0.0.0/dbhome_1/bin/dbua -silent -dbName zdlras'' timeout to 14400.

…

…

Database upgrade has been completed successfully, and the database is ready to use.

100% complete

Mon Apr 6 15:28:29 2020: Set Command '/u01/app/oracle/product/12.2.0.1/dbhome_1/bin/srvctl status database -db zdlras -v' timeout to 900.

…

…

Mon Apr 6 15:33:02 2020: Set Command '/u01/app/oracle/product/19.0.0.0/dbhome_1/bin/rman catalog /@install.local cmdfile=/opt/oracle.RecoveryAppliance/install/upgrade.rman' timeout to 0.

…

…

Mon Apr 6 15:35:26 2020: End: Upgrade RA Catalog.

Mon Apr 6 15:35:26 2020: End: RunLevel 2010

Mon Apr 6 15:35:26 2020: Start: RunLevel 2011

Mon Apr 6 15:35:26 2020: End: RA Catalog Upgrade

Mon Apr 6 15:35:26 2020: Start: RA DB System Updates

Mon Apr 6 15:35:26 2020: Switching to UID: 1001, GID: 1002

Mon Apr 6 15:35:26 2020: SQL: BEGIN

DBMS_RA_ADM.UPDATE_DATAPUMP_DIR;

DBMS_RA_ADM.CREATE_NODE_DETAIL;

DBMS_RA_ADM.DISABLE_BCT;

DBMS_RA_ADM.CREATE_RA_CF('+DELTA');

DBMS_RA_ADM.LOCK_TABLE_STATS;

DBMS_RA_ADM.RECOMP_SERIAL;

DBMS_RA_ADM.GRANT_SYS_SYNONYMS;

DBMS_RA_ADM.GRANT_RASYS_PERMISSION;

DBMS_RA_ADM.CREATE_INIT_PARAM;

DBMS_RA_ADM.UPDATE_PGA_DB_PARAMETERS;

DBMS_RA_ADM.RAA_SET_DISPATCHERS;

DBMS_RA_ADM.CHECK_INIT_PARAM;

DBMS_RA_ADM.UPDATE_SL_TABLE;

DBMS_RA_ADM.UPDATE_THROTTLE;

DBMS_RA_ADM.UPDATE_INIT_PARAM;

DBMS_XDB_CONFIG.SETHTTPSPORT(0);

DBMS_XDB_CONFIG.SETHTTPPORT(8001);

DBMS_XDB.SETLISTENERENDPOINT(1,null,8001,1);

RASYS.DBMS_RA.CONFIG( '_nofilesize_mismatch_log', 1);

END;

…

…

Mon Apr 6 15:43:57 2020: spawn /usr/bin/ssh -o ConnectTimeout=20 -o LogLevel=error -l root zeroinsg01 unzip -o /opt/oracle.RecoveryAppliance/install/TFA-LINUX_v19.2.1.zip -d /u01/app/19.0.0.0/grid/tfa

Archive: /opt/oracle.RecoveryAppliance/install/TFA-LINUX_v19.2.1.zip

…

…

Mon Apr 6 15:46:14 2020: End: Upgrade Recovery Appliance - Step [Upgrade]

Some hints from this log:

- The files dbmsrsadmpreq.sql, dbmsrsadm.sql, and prvtrsadm.sql are where most of the ZDLRA tables and procedures come from.

- DBUA is used for the database upgrade.

- upgrade.rman (the name is self-describing) upgrades the RMAN catalog.

- The DBMS_RA_ADM calls run because the new SQL (above) was loaded.

- TFA is upgraded to a special release (just for ZDLRA). In the future, AHF will be supported.
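Since the log is long, a small filter can help to follow only the phases. This is just a sketch: upgrade_milestones is a hypothetical helper name (it is not part of racli), and it assumes the log keeps the "timestamp: Start:/End:/Skip:" layout shown above.

```shell
# Hypothetical helper, not part of racli.
# Reads a racli upgrade log on stdin and prints only the milestone lines,
# i.e. lines where the timestamp is followed by "Start:", "End:" or "Skip:".
upgrade_milestones() {
    grep -E ': (Start|End|Skip): '
}
```

It can be fed with the log that racli reports when it starts, for example: upgrade_milestones < /opt/oracle.RecoveryAppliance/log/racli_upgrade_appliance.log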

9 – Upgrade appliance, step=4, and step=5

The other steps (4 and 5) are responsible for finishing the migration from DBFS to ACFS and for updating OSB if needed (to talk with tape).

The procedure is the same; only the step number changes:

[root@zeroinsg01 ~]# /opt/oracle.RecoveryAppliance/bin/racli upgrade appliance --step=4

Created log /opt/oracle.RecoveryAppliance/log/racli_upgrade_appliance.log

Step [4 of 5]: <racli upgrade appliance>

Mon Apr 6 19:37:52 2020: Start: Migrate DBFS to ACFS

Mon Apr 6 19:37:54 2020: Skip: Migrate DBFS to ACFS - [Not Required]

Next: <racli upgrade appliance --step=5>

[root@zeroinsg01 ~]# /opt/oracle.RecoveryAppliance/bin/racli upgrade appliance --step=5

Created log /opt/oracle.RecoveryAppliance/log/racli_upgrade_appliance.log

Step [5 of 5]: <racli upgrade appliance>

Mon Apr 6 19:38:00 2020: Start: Secure Backup Update

Mon Apr 6 19:38:00 2020: Start: Tape Update

Mon Apr 6 19:38:30 2020: End: Tape Update

Mon Apr 6 19:38:30 2020: End: Secure Backup Update

Step [5 of 5] - Completed. End OK.

[root@zeroinsg01 ~]#

In this case, since the migration to ACFS was already done in the past and tape is not used, these steps were fast.

Post Patching

After the upgrade finishes, some checks can be made to see if everything is fine. The version and status checks can be used for that:

[root@zeroinsg01 ~]# racli version

Recovery Appliance Version:

exadata image: 19.2.3.0.0.190621

rarpm version: ra_automation-19.2.1.1.1.202001-31014797.x86_64

rdbms version: RDBMS_19.3.0.0.190416DBRU_LINUX.X64_RELEASE

transaction : kadjei_bug-31014797

zdlra version: ZDLRA_19.2.1.1.1.202001_LINUX.X64_RELEASE

[root@zeroinsg01 ~]#

[root@zeroinsg01 ~]#

[root@zeroinsg01 ~]# racli status appliance

zeroinsg01 db state: [ONLINE]

zeroinsg01 ra_server state: [ONLINE]

zeroinsg01 crs state: [ONLINE]

zeroinsg02 crs state: [ONLINE]

zeroinsg02 ra_server state: [ONLINE]

zeroinsg02 db state: [ONLINE]

[root@zeroinsg01 ~]#

[root@zeroinsg01 ~]#

[root@zeroinsg01 ~]# racli run check --all

Mon Apr 6 19:39:42 2020: Start: racli run check --all

Created log file zeroinsg01.zero.flisk.net:/opt/oracle.RecoveryAppliance/log/racli_run_check_20200406.1939.log

Mon Apr 6 19:39:45 2020: CHECK: RA Services - PASS

Mon Apr 6 19:39:53 2020: CHECK: Exadata Image Version - PASS

Mon Apr 6 19:39:54 2020: CHECK: Active Incidents - PASS

Mon Apr 6 19:40:02 2020: CHECK: Init Parameters - PASS

Mon Apr 6 19:40:03 2020: CHECK: Invalid Objects - PASS

Mon Apr 6 19:40:04 2020: CHECK: Export Backup - PASS

Mon Apr 6 19:40:04 2020: CHECK: ZDLRA Rasys Wallet - PASS

Mon Apr 6 19:40:07 2020: CHECK: Compute Node AlertHistory

Mon Apr 6 19:40:07 2020: HOST: [zeroinsg02] - PASS

Mon Apr 6 19:40:07 2020: HOST: [zeroinsg01] - PASS

Mon Apr 6 19:40:15 2020: CHECK: Storage Cell AlertHistory

Mon Apr 6 19:40:15 2020: HOST: [zerocadm05] - PASS

Mon Apr 6 19:40:15 2020: HOST: [zerocadm01] - PASS

Mon Apr 6 19:40:15 2020: HOST: [zerocadm04] - PASS

Mon Apr 6 19:40:15 2020: HOST: [zerocadm03] - PASS

Mon Apr 6 19:40:15 2020: HOST: [zerocadm06] - PASS

Mon Apr 6 19:40:15 2020: HOST: [zerocadm02] - PASS

Mon Apr 6 19:40:15 2020: CHECK: Oracle User Password Expires

Mon Apr 6 19:40:15 2020: HOST: [zeroinsg02] - PASS

Mon Apr 6 19:40:15 2020: HOST: [zeroinsg01] - PASS

Mon Apr 6 19:40:16 2020: CHECK: ZDLRA Version

Mon Apr 6 19:40:16 2020: HOST: [zeroinsg01] - PASS

Mon Apr 6 19:40:16 2020: HOST: [zeroinsg02] - PASS

Mon Apr 6 19:40:16 2020: End: racli run check --all

[root@zeroinsg01 ~]#

As you can see, the rpm version is now 19.2.1.1.1.202001-31014797 and the ZDLRA version is 19.2.1.1.1.202001. All the services are up and running, and every check reports PASS.
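If you prefer not to read these outputs by eye, they can be filtered. The sketch below is only an illustration: failed_checks and version_field are hypothetical helper names (not racli options), and they assume the output layout shown above. How racli words a verdict other than PASS is not shown in this post, so the filter simply reports any CHECK or HOST line that has a verdict different from PASS.

```shell
# Hypothetical helpers, not part of racli. Both read command output on stdin.

# Print the CHECK/HOST lines of `racli run check --all` whose verdict is not PASS.
# A CHECK line without " - <verdict>" is only a header for the HOST lines
# that follow it, so it is ignored.
failed_checks() {
    awk '/: (CHECK|HOST): / && / - / && !/ - PASS[ \t]*$/'
}

# Print the value of one field of `racli version`, for example "zdlra version".
# The field name is trimmed before the comparison because some names are
# followed by a space before the colon ("transaction : ...").
version_field() {
    awk -F': *' -v key="$1" '{
        k = $1
        sub(/^[ \t]+/, "", k)
        sub(/[ \t]+$/, "", k)
        if (k == key) print $2
    }'
}
```

For example, racli run check --all | failed_checks prints nothing when every check passed, and racli version | version_field "zdlra version" returns the string to compare against the target version.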

Issues and Known Issues

If an error occurs during the patch procedure, the recommendation is to open an SR with Oracle. This is needed because (as explained before) ZDLRA is an appliance where the database and its contents are handled by Oracle. This means that we do not create databases inside it or change any parameter (this includes GI). So, if an error occurs, open an SR.

One note to check in case of a problem is the ZDLRA Detailed Troubleshooting Methodology (Doc ID 2408256.1). Even so, the workflow is the same: open an SR.

Some known issues that can be addressed beforehand:

- Stop-start EM Agent: The patch stops and starts the EM Agent on the nodes, and sometimes the agent has issues. So, try restarting it before the patch to check that it is OK on both nodes.

- SQLNET.ORA from GI: Check that sqlnet.ora exists in the GI home. If it does not exist, it can be a problem. The same is true for the OH.

- HAIP: If upgrading to the 19c version, HAIP needs to be removed. I already explained this in another post.

- Running tasks: Check whether there are running tasks in the rasys.ra_task table. It is recommended that the upgrade takes place when few tasks are running. I recommend not upgrading while an INDEX_BACKUP task is running, to avoid stopping the task in one version and resuming it in another.
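Two of the checks above (sqlnet.ora and running tasks) can be sketched as small helpers. This is only an illustration under some assumptions: missing_sqlnet and ra_task_query are hypothetical names, sqlnet.ora is assumed to be in the default network/admin directory of each home (TNS_ADMIN can change that), and the TASK_TYPE and STATE columns are the ones I recall from the ra_task view, so verify them on your release.

```shell
# Hypothetical helpers, not part of racli.

# Report every Oracle home (passed as arguments) without network/admin/sqlnet.ora.
# Returns non-zero if at least one file is missing.
missing_sqlnet() {
    rc=0
    for home in "$@"; do
        if [ ! -f "$home/network/admin/sqlnet.ora" ]; then
            echo "missing: $home/network/admin/sqlnet.ora"
            rc=1
        fi
    done
    return $rc
}

# Print a query that counts the tasks per type and state in rasys.ra_task.
# Assumption: the view exposes TASK_TYPE and STATE columns.
ra_task_query() {
    cat <<'EOF'
SELECT task_type, state, COUNT(*) AS tasks
  FROM rasys.ra_task
 GROUP BY task_type, state
 ORDER BY task_type, state;
EOF
}
```

Call missing_sqlnet with the GI home and the OH of your appliance as arguments, and pipe the output of ra_task_query to sqlplus -s / as sysdba as the oracle user. An INDEX_BACKUP row in the result is the signal to wait before starting the upgrade.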

Upgrade and Replication

Since ZDLRA can be used in replicated mode, where the upstream sends backups to the downstream, it is important that whoever receives the backup can handle it. This means that it is recommended to always upgrade the downstream first. That way, when it receives the backups from the upstream, its internal ZDLRA database and library can handle them.
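The "downstream first" rule can be expressed as a simple version comparison before touching the upstream. The sketch below is only an illustration: version_ge is a hypothetical helper name, and it relies on the version sort (-V) of GNU sort, which is present on Oracle Linux.

```shell
# Hypothetical helper, not part of racli.
# Succeeds when version $1 is greater than or equal to version $2.
# sort -V orders dotted version strings numerically, field by field,
# so the last line of the sorted pair is the higher version.
version_ge() {
    [ "$(printf '%s\n' "$1" "$2" | sort -V | tail -n 1)" = "$1" ]
}
```

With the versions of this post, version_ge 19.2.1.1.1.202001 12.2.1.1.2.201907 succeeds, meaning a downstream already on 19.2.1.1.1.202001 is ready to receive from an upstream still on 12.2.1.1.2.201907. If the call fails with the downstream version as the first argument, upgrade the downstream before the upstream.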

References

Some references about how to do the patch and handle the issues:

- Zero Data Loss Recovery Appliance Supported Versions (Doc ID 1927416.1)

- ZDLRA Release and Patching Policy (Doc ID 2410137.1)

- ZDLRA Upgrade and Patching Troubleshooting Guide (Doc ID 2639262.1)

- Zero Data Loss Recovery Appliance Upgrade and Patching (Doc ID 2028931.1)

- ZDLRA Detailed Troubleshooting Methodology (Doc ID 2408256.1)

Disclaimer: “The postings on this site are my own and don’t necessarily represent my actual employer positions, strategies or opinions. The information here was edited to be useful for general purpose, specific data and identifications were removed to allow reach the generic audience and to be useful for the community. Post protected by copyright.”

Hi Fernando.

Great article. With regard to replication: “This means that is recommended to upgrade always the downstream first.”

But if you have two ZDLRAs and both act as upstream and downstream, albeit for different databases, then you do not have a choice.

We have Site A and Site B; databases in Site A have their backups replicated to Site B and vice versa.

Also, to go from 12.1 to 19 we had to perform a two-step upgrade process (since a direct upgrade would have broken replication).

Hi,

In this case, I recommend stopping the replication until both sides are on the same version.

Pausing the replication is one option. It avoids “sharing” data/backups between them while the versions differ.

Hi Fernando, thanks for such a nice article. Is it possible to patch ZDLRA in a rolling fashion?

Hello,

You can patch the Exadata stack online, in rolling mode. So, for the Exadata software (Linux image), you patch the storage cells one after another, and then the dbnodes one after another.

For the ZDLRA software, as far as I know, it is not possible to patch online. Your ZDLRA software needs to be offline.

If you need HA, you need to use a second ZDLRA and configure HA backup over both to avoid the downtime.

If you have Data Guard and multiple ZDLRAs, you can use different log_archive_dest_n destinations, so that each destination points to a dedicated ZDLRA.

Hope that it helps.

Fernando Simon