The Exadata X8M model was released at Oracle Open World 2019, and its new feature, the “M”, aims to reduce latency and increase IOPS. The Exadata X8M uses Remote Direct Memory Access (RDMA) to allow the database to access the storage server memory directly. And the memory, in this case, is special: X8M uses Intel Optane DC Persistent Memory modules (DIMM/NVDIMM, Non-Volatile DIMM, providing PMEM, Persistent Memory) attached directly to the storage server, and these can be accessed directly from the database using RDMA through the RoCE network. Let’s check the details to see what it is.

The image above was donated by my friend Bruno Reis. The first image that I used (instead of the one above) was based on/found at this link. Social media (LinkedIn/Twitter) still caches/references the old one. I forgot to add the correct source when I published the post for the first time.

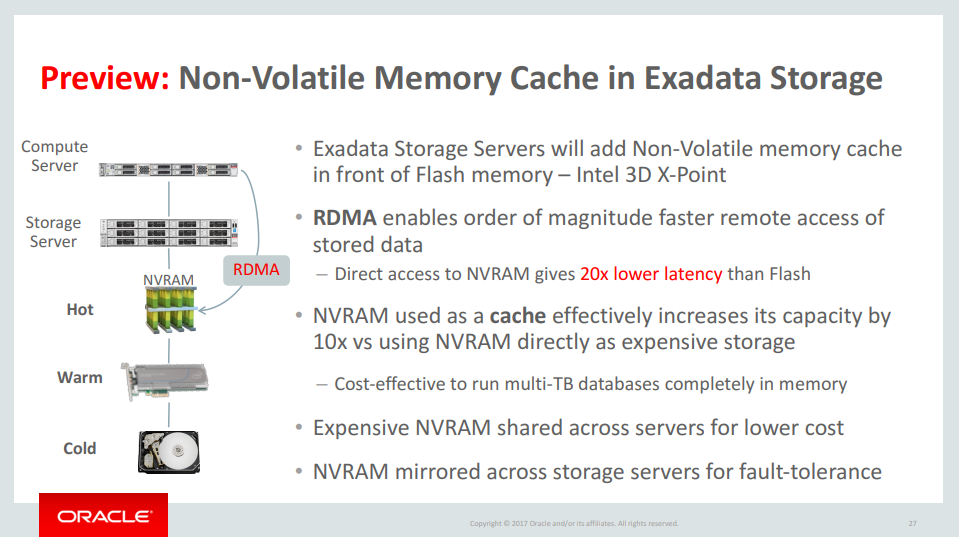

As you can see in the YouTube release video for Exadata X8M (New Exadata X8M PMEM and RoCE capabilities and benefits) and in the Oracle Blogs post about the Exadata Persistent Memory Accelerator, the goal is to speed up access, mainly for OLTP databases. But the way that Oracle does that for Exadata was already covered (as a preview) in several past OOW presentations:

The image above came from the PDF of the presentation “Exadata Technical Deep Dive: Architecture and Internals”, available at the Oracle website, or from the Oracle Blogs post that I mentioned before.

Basically, very basically, the PMEM acts as a cache in front of Flash. I say basically because it is not just a cache: a lot of internal things changed and needed to be addressed. A layer of 1.5 TB of PMEM was added to each storage server.

To understand everything, we need to check and remember some details.

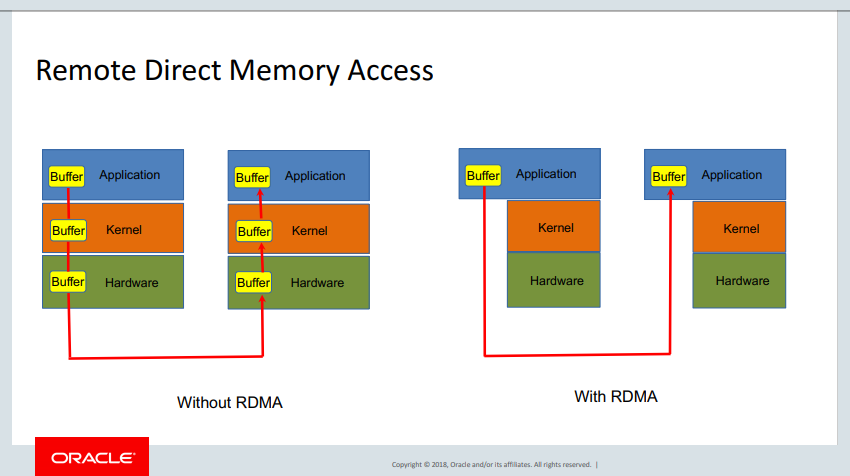

As you saw before, it relies on RDMA to allow the database to access the memory of the storage server. This works because RDMA “provides efficient and direct access to host or client memory without involving processor overhead”; basically, it allows direct access to memory, and this cuts out some buffers and latencies in the middle. The image below shows the basic layout (this image was taken from the OOW 2018 presentation “How Oracle Linux Delivers Superior Application Scalability for Exadata”, which is available at the Oracle website, and I recommend reading it because it has a lot of good information about RDMA). Even though it is related to InfiniBand, the technical data is good and a lot of things remain the same:

Talking about RoCE (RDMA over Converged Ethernet), the change was needed to handle everything. Now you need to handle the “normal I/O” plus the “low latency read I/O”. And if you consolidate databases in Exadata, more power is needed from it. If you want to understand the RDMA integration in more detail, check the white paper “Delivering Application Performance with Oracle’s InfiniBand Technology” from Oracle. You can check the Wikipedia page too, and here too.

PMEM

With this review fresh in mind, we can check PMEM in more detail. This “new feature” is more than a simple cache in front of the flash. It relies on dedicated memory DIMMs (Intel Optane) and a specific protocol and channel (RDMA and RoCE).

All of the information in this topic about PMEM I summarized from the presentation “Exadata with Persistent Memory: An Epic Journey”, given by Oracle at “PERSISTENT PROGRAMMING IN REAL LIFE – PIRL’19”. You can even watch the video of the presentation on YouTube: https://www.youtube.com/watch?v=pNuczhPB55U (I recommend it).

About Intel Optane, you can read more directly from the Intel Optane DC site, or even the Intel page about the partnership between Intel and Oracle (and more details here too). If you want to dig more, you can check here what the DIMM modules/expansion are, or really technical details about the modules and libraries here.

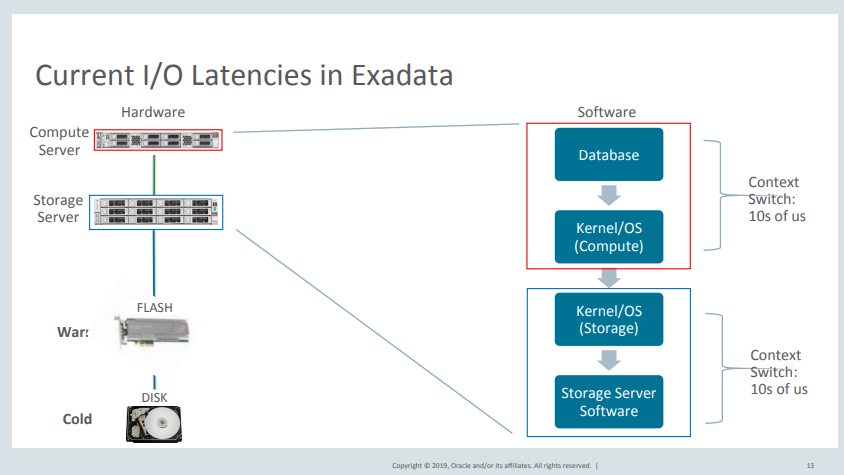

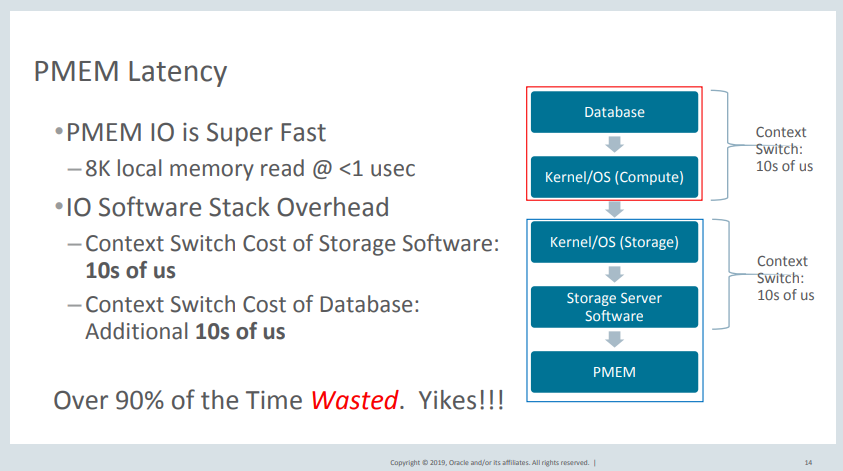

But why is PMEM so different from Flash? The detail here is that, even with flash, all the I/O needs to pass through the storage server stack and the kernel part of the database server. This means some latency:

The idea is to bypass everything and link the database directly with the PMEM of the storage server. And with Exadata, this can be done easily because the RDMA protocol is ZDP (Zero-loss Zero-Copy Datagram Protocol), and when you integrate everything, less CPU is needed. Part of this direct access comes from the DAX (Direct Access) Intel library that allows access to PMEM. If you want, you can read more here and here.

The data stored in PMEM goes through the same process as disk and flash in Exadata Storage (ASM). And since it is the same, by Exadata design, it is mirrored across other storage servers in the rack.

Some interesting details here are the “One Side” and “Two Side” paths, the PMEM cache, and the “Torn Blocks”:

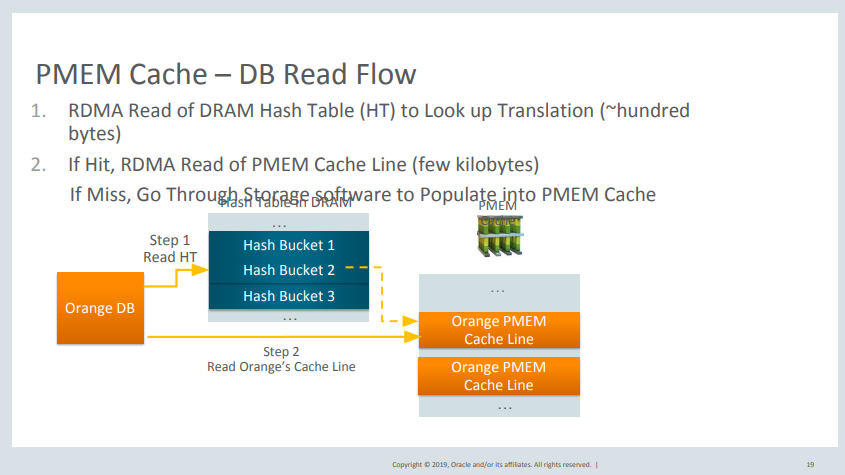

PMEM Cache/HASH: It is the hash table that maps the desired information (data) inside the memory. The analogy here is the same as the “page table” and TLB for memory access. You can read more here.

One Side path: Understand this as the shortest/fastest path to access data. It occurs when the database reads directly from PMEM. The database, over RDMA, reads the PMEM hash table and then executes a second access (over RDMA again) to read the desired data.

Two side read/write: follows the traditional path. The access passes from the database to the database server kernel, then to the storage kernel, the storage software, and finally the memory.
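The hash lookup and the one side read described above can be sketched as a toy model. This is only an illustration, not Oracle's implementation: there is no real RDMA here, and `StorageServerPmem`, `RdmaClient`, `one_sided_read`, the block id tuple, and the 8192-byte block size are all names and values I made up. Returning `None` on a miss so that the caller can fall back to the two side path is also my assumption.

```python
from dataclasses import dataclass, field

BLOCK_SIZE = 8192  # assumed block size, for illustration only


@dataclass
class StorageServerPmem:
    """Toy stand-in for the PMEM of one storage server (hypothetical model)."""
    hash_table: dict = field(default_factory=dict)   # block id -> offset in memory
    memory: bytearray = field(default_factory=bytearray)

    def cache_block(self, block_id, data):
        # In the real system the storage software populates the cache;
        # here we simply append the block and record where it landed.
        self.hash_table[block_id] = len(self.memory)
        self.memory += data


class RdmaClient:
    """Counts simulated RDMA reads issued by the database side."""

    def __init__(self, server):
        self.server = server
        self.rdma_reads = 0

    def read_hash_entry(self, block_id):
        self.rdma_reads += 1   # first access: the PMEM hash table
        return self.server.hash_table.get(block_id)

    def read_memory(self, offset, length):
        self.rdma_reads += 1   # second access: the data itself
        return bytes(self.server.memory[offset:offset + length])


def one_sided_read(client, block_id):
    """Two RDMA reads on a hit, one on a miss (assumed fallback: return None)."""
    offset = client.read_hash_entry(block_id)
    if offset is None:
        return None
    return client.read_memory(offset, BLOCK_SIZE)


if __name__ == "__main__":
    server = StorageServerPmem()
    server.cache_block(("file7", 42), b"A" * BLOCK_SIZE)
    client = RdmaClient(server)
    block = one_sided_read(client, ("file7", 42))
    print(len(block), client.rdma_reads)
```

The point of the model is only the shape of the access: the storage server CPU and software are never called by the client, which just reads the hash table and then the memory range that the table points to.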

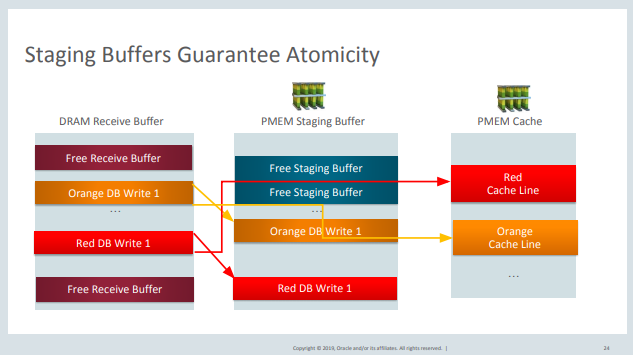

Torn block: This means/leads to corruption of a block stored in PMEM. This type of problem occurs because (by the library) memory is accessed in 8-byte segments, and since database blocks are bigger than that (and Oracle checks just the beginning and the end of each block), you can have blocks in PMEM whose header and footer carry information different from the body. To address that, Oracle put a “staging buffer” in PMEM: when writing a block to PMEM, it is first written to the staging buffer and, once the write is guaranteed, it is passed to the main PMEM area that can be read by the database.
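To make the torn block problem more concrete, here is a minimal sketch of it. Everything in it is an assumption for illustration: the 64-byte block, the header/footer layout, the chunk order, and the names `make_block`, `copy_chunks`, and `PmemSlot` are mine, and the final “pass to main PMEM” step is modeled as simply replacing the visible copy once staging is complete.

```python
CHUNK = 8        # the text says memory is accessed in 8-byte segments
BLOCK_SIZE = 64  # tiny block for illustration; real database blocks are bigger


def make_block(version, fill):
    """Hypothetical layout: 8-byte header, body, 8-byte footer (same tag)."""
    tag = version.to_bytes(CHUNK, "big")
    body = bytes([fill]) * (BLOCK_SIZE - 2 * CHUNK)
    return tag + body + tag


def header_footer_match(block):
    # Mimics a check that looks only at the beginning and end of the block.
    return block[:CHUNK] == block[-CHUNK:]


def copy_chunks(target, block, chunk_order, stop_after=None):
    """Copy 8 bytes at a time; stop_after simulates an interrupted write."""
    for written, index in enumerate(chunk_order):
        if stop_after is not None and written == stop_after:
            return False
        start = index * CHUNK
        target[start:start + CHUNK] = block[start:start + CHUNK]
    return True


class PmemSlot:
    def __init__(self, block):
        self.visible = bytearray(block)        # what the database can read
        self.staging = bytearray(len(block))   # staging buffer

    def write_direct(self, block, chunk_order, stop_after=None):
        return copy_chunks(self.visible, block, chunk_order, stop_after)

    def write_staged(self, block, chunk_order, stop_after=None):
        done = copy_chunks(self.staging, block, chunk_order, stop_after)
        if done:
            # Publish only a complete write (modeled as a simple swap).
            self.visible = bytearray(self.staging)
        return done


if __name__ == "__main__":
    old, new = make_block(1, 0x11), make_block(2, 0x22)
    order = [0, 7, 1, 2, 3, 4, 5, 6]   # header and footer land first

    torn = PmemSlot(old)
    torn.write_direct(new, order, stop_after=2)
    print(header_footer_match(torn.visible), bytes(torn.visible) == new)

    safe = PmemSlot(old)
    safe.write_staged(new, order, stop_after=2)
    print(bytes(safe.visible) == old)
```

In the direct write, the header and footer already carry the new version while the body is still the old one, so a check that looks only at both ends passes on a block that is actually torn. With the staging buffer, the interrupted write never reaches the copy that readers see.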

But this is not simple. Since it is persistent memory, some control is needed to guarantee that what was written to PMEM remains there. As for mirroring, the mirror is done by the database/ASM itself (in the same way as for flash/disk). To guarantee data persistence, Oracle uses the ADR (Asynchronous DRAM Refresh) domain to protect the memory controller and the PMEM itself.

In the end, there are some controls to avoid data I/O failures when a DIMM memory error occurs. But as stated in the presentation, some improvements are needed in this area to allow writes to be done over the “One Side Path”.

Why do that?

You may ask: why do that? As said before, PMEM differs from flash because the access is direct from the database side. The stack to access the data is cut and the latency drops a lot. Of course, the size will be smaller compared with flash/disk, but depending on the application that you have (or need to deliver), it is the cost that you pay.

And, of course, because other players are doing that and undercutting Oracle.

Disclaimer: “The postings on this site are my own and don’t necessarily represent my actual employer positions, strategies or opinions. The information here was edited to be useful for general purpose, specific data and identifications were removed to allow reach the generic audience and to be useful for the community. Post protected by copyright.”
