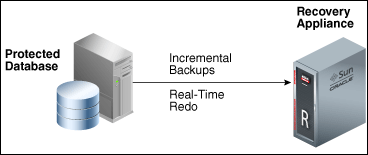

Real-time redo transport is the feature that allows you to reduce to zero the RPO (Recovery Point Objective) for your database. Check how to configure real-time redo, the steps, parameters, and other details that need to be modified to enable it.

The idea behind real-time redo transport it is easy, basically the ZDLRA it is a remote destination for your redo log buffers/archivelogs of your database. It is really, really, similar to what occurs for data guard configurations (but here you don’t need to set all datafiles as an example). It is not the same too because ZDLRA can detect if the database stops/crash and will generate the archivelog (at ZDLRA side) with all the received redo and this can be used to restore to, at least zero/sub-seconds, of data loss.

Using real-time redo it is the only way to reach RPO zero. With other features of ZDLRA, you can have a better backup window time (but just that) using incremental backups. Just using real-time redo you reach zero RPO and this impacts directly how to configure for MAA compliance. There are a lot of options and level of protection for MAA that you can check at “Maximum Availability Architecture (MAA) – On-Premises HA Reference Architectures 2019”, “Maximum Availability Architecture Best Practices for Oracle Cloud”, “Oracle MAA Reference Architectures”, “Maximum Availability Architecture – Best Practices for Oracle Database 19c”.

This post starts from one environment that you already enrolled in the database at ZDLRA. I already wrote about how to do that, you can check here in my previous post. This is the first post about real-time redo, here you will see how to configure and verify it is working.

Pre-Check

The way that I configure real-time redo for database it is a little different than what is in the official docs. I added some pre-checks and another order for some steps, but the result is the same. If you want to check the official steps you can check at Zero Data Loss Recovery Appliance Protected Database Configuration Guide or at Zero Data Loss Recovery Appliance Administrator’s Guide.

Just contextualization of environment:

- ZDLRA database name: zdlras1

- Database name: orcl19

- VPC user: cat_zdlra

Check Configuration

The first step that I do is verify the current configuration. Mainly the preexistence of wallet:

[oracle@orcloel7 ~]$ mkstore -wrl /u01/app/oracle/product/19.3.0.0/dbhome_1/dbs/ra_wallet -listCredential Oracle Secret Store Tool Release 19.0.0.0.0 - Production Version 19.3.0.0.0 Copyright (c) 2004, 2019, Oracle and/or its affiliates. All rights reserved. List credential (index: connect_string username) 1: zdlras1-scan:1521/zdlras1:CAT_ZDLRA cat_zdlra [oracle@orcloel7 ~]$ [oracle@orcloel7 ~]$ cat $ORACLE_HOME/dbs/raORCL19.ora RA_WALLET='LOCATION=file:/u01/app/oracle/product/19.3.0.0/dbhome_1/dbs/ra_wallet CREDENTIAL_ALIAS=zdlras1-scan:1521/zdlras1:CAT_ZDLRA' [oracle@orcloel7 ~]$

The most import here is the credential name (zdlras1-scan:1521/zdlras1:CAT_ZDLRA in this case) because it needs to be the same name for the database file that points to RA_WALLET. The config file is for each database/instance and has the name ra<DBNAME>.ora (it was already configured by the previous post pointed before).

After that, I check if the sqlnet.ora and tnsnames.ora have the information for wallet and TNS entry respectively:

[oracle@orcloel7 ~]$ cat $ORACLE_HOME/network/admin/sqlnet.ora

NAMES.DIRECTORY_PATH= (TNSNAMES, EZCONNECT)

SQLNET.WALLET_OVERRIDE = true

WALLET_LOCATION =

(

SOURCE =

(METHOD = FILE)

(METHOD_DATA =

(DIRECTORY = /u01/app/oracle/product/19.3.0.0/dbhome_1/dbs/ra_wallet)

)

)

[oracle@orcloel7 ~]$

[oracle@orcloel7 ~]$ tnsping zdlras1

TNS Ping Utility for Linux: Version 19.0.0.0.0 - Production on 01-OCT-2019 23:30:36

Copyright (c) 1997, 2019, Oracle. All rights reserved.

Used parameter files:

/u01/app/oracle/product/19.3.0.0/dbhome_1/network/admin/sqlnet.ora

Used TNSNAMES adapter to resolve the alias

Attempting to contact (DESCRIPTION = (ADDRESS = (PROTOCOL = TCP)(HOST = zdlras1-scan)(PORT = 1521)) (CONNECT_DATA = (SERVICE_NAME = zdlras1)))

OK (0 msec)

[oracle@orcloel7 ~]$

Check Database Configuration

After check outside of the database config, it is important to check some database requirements. The most import is verifying if REMOTE_LOGIN_PASSWORDFILE it is set as “exclusive” or “shared”:

[oracle@orcloel7 ~]$ sqlplus / as sysdba SQL*Plus: Release 19.0.0.0.0 - Production on Tue Oct 1 23:32:09 2019 Version 19.3.0.0.0 Copyright (c) 1982, 2019, Oracle. All rights reserved. Connected to: Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production Version 19.3.0.0.0 SQL> SQL> show parameter REMOTE_LOGIN_PASSWORDFILE; NAME TYPE VALUE ------------------------------------ ----------- ------------------------------ remote_login_passwordfile string EXCLUSIVE SQL> SQL>

Other parameters to verify are log_archive_config and db_unique_name. They are important to verify the current configuration in cases of databases using DG as an example.

Configuring Real-Time Redo

After these pre-checks, we can start the configuration. Here I set everything at spfile to, after configuring everything, bounce the database and start with everything up and running.

REDO_TRANSPORT_USER

It is the parameter that defines the user that the database utilizes to send the redo to another database. This user needs to exist in both databases (for DG), and because of that needs to exist in ZDLRA. When using ZDLRA and real-time redo it needs to be the same as VPC inside the database. So, the same VPC user needs to exists in database side and set for this parameter:

SQL> show parameter redo_transport_user; NAME TYPE VALUE ------------------------------------ ----------- ------------------------------ redo_transport_user string SQL> SQL> alter system set redo_transport_user='CAT_ZDLRA' scope = spfile SID='*'; System altered. SQL> SQL> create user cat_zdlra identified by s3nhazdlra; User created. SQL> grant sysoper to cat_zdlra; Grant succeeded. SQL>

As you can see the user CAT_ZDLRA was created inside of the database. The user needs to have at least the grant SYSOPER to work properly.

LOG_ARCHIVE_CONFIG

This parameter works the same for DG environments, and since ZDLRA real-time redo it is a remote destination for archive, we do the same. Here, we set this specifying the database unique name for ZDLRA here (zdlras1 in my current environment):

SQL> show parameter log_archive_config; NAME TYPE VALUE ------------------------------------ ----------- ------------------------------ log_archive_config string SQL> SQL> show parameter db_unique_name; NAME TYPE VALUE ------------------------------------ ----------- ------------------------------ db_unique_name string ORCL19 SQL> SQL> ALTER SYSTEM SET log_archive_config='DG_CONFIG=(orcl19,zdlras1)' SCOPE=SPFILE SID='*'; System altered. SQL>

If you already have DG configured, you need to just add the entry for ZDLRA database here in the parameter.

LOG_ARCHIVE_DEST_n

SQL> ALTER SYSTEM SET log_archive_dest_2='SERVICE="zdlras1-scan:1521/zdlras1:CAT_ZDLRA" ASYNC NOAFFIRM delay=0 optional compression=disable max_failure=1 max_connections=1 reopen=10 DB_UNIQUE_NAME=zdlras1 net_timeout=8 group=1 priority=1 VALID_FOR=(ONLINE_LOGFILE,ALL_ROLES)' scope = spfile sid = '*'; System altered. SQL> SQL> alter system set log_archive_dest_state_2=DEFER scope = spfile sid = '*'; System altered. SQL>

The secrets are:

- SERVICE: it is the connection to ZDLRA. It can be a TNS service name, or an ezconnect. If you sawm I have the credential name, as the same of ezconnection. I recommend to use this, so you know what the credential it is for. If you use TNS entry for SERVICE, recommend having a credential name with the same name.

- ASYNC NOAFFIRM: This is controversy (I will explain later), but this defines the mode that redo log is shipped for the remote destination.

- DB_UNIQUE_NAME: Database unique name of recovery appliance database.

All the others are the normal parameters for DG config and you can check at LOG_ARCHIVE_DEST_n Parameter Attributes.

As I told before, the ASYNC NOAFFIRM are controversial because if you look it was defined as ASYNC, and it is the same in the ZDLRA docs. You can read here at “Protection of Ongoing Transactions” (or at “How Real-Time Redo Transport Works”):

“Redo data from the protected database is written asynchronously to the Recovery Appliance as it is generated. Load on production database servers is minimized because redo data is shipped directly from the memory to the Recovery Appliance without the involvement of disk I/O”

“To avoid degrading the performance of the protected database, protected databases transfer redo asynchronously to the Recovery Appliance. If a protected database is lost, zero to subsecond data loss is expected in most cases.”

The controversy here is because the appliance it is ZERO DATA LOSS, and ASYNC does not guarantee zero data loss. So, is it a scam case and we need to change the name for Non-Zero Data Loss Recovery Appliance? No, they just are trying to remember you that to minimize the impact over the database, it was configured in async mode. But you can configure in SYNC mode, it is allowed, but remember that this can/will impact the database.

If you want to understand the impact over that SYNC/ASYNC/AFFIRM/NOAFFIRM have over the database, read the “Best Practices for Synchronous Redo Transport Data Guard and Active Data Guard” doc. It is fundamental.

I will cover this in the next post about real-time redo.

Using Real-Time Redo

After pre-check and configure all the parameters above, we can start to use it. If you have some part already configured (like the redo transport user, and the wallet), you can config without restart database. But, here, I made the restart of the database (and enabled the log_archive_dest_state_n).

The best way to show/check real-time redo working is through rman, where I can describe the archivelogs and backups that exist for each one:

[oracle@orcloel7 ~]$ rman target=/ catalog=cat_zdlra/s3nhazdlra@zdlras1

Recovery Manager: Release 19.0.0.0.0 - Production on Tue Oct 1 23:57:42 2019

Version 19.3.0.0.0

Copyright (c) 1982, 2019, Oracle and/or its affiliates. All rights reserved.

connected to target database: ORCL19 (DBID=310627084)

connected to recovery catalog database

RMAN> list copy of archivelog all;

specification does not match any archived log in the repository

RMAN> alter system archive log current;

Statement processed

RMAN> list copy of archivelog all;

List of Archived Log Copies for database with db_unique_name ORCL19

=====================================================================

Key Thrd Seq S Low Time

------- ---- ------- - -------------------

4329 1 47 A 02-10-2019_00:09:32

Name: /u01/app/oracle/oradata/ORCL19/archivelog/2019_10_02/o1_mf_1_47_gs7mzgkn_.arc

RMAN> list backup of archivelog all completed after "sysdate - 5/1440";

List of Backup Sets

===================

BS Key Size Device Type Elapsed Time Completion Time

------- ---------- ----------- ------------ -------------------

4365 595.50K SBT_TAPE 00:00:00 02-10-2019_00:15:34

BP Key: 4366 Status: AVAILABLE Compressed: YES Tag: TAG20191002T001534

Handle: $RSCN_1920977_RTIM_1009298641_THRD_1_SEQ_47_CTKEY_4222_BACKUP Media:

List of Archived Logs in backup set 4365

Thrd Seq Low SCN Low Time Next SCN Next Time

---- ------- ---------- ------------------- ---------- ---------

1 47 2370920 02-10-2019_00:09:32 2372594 02-10-2019_00:15:10

RMAN>

Look the example above, I started with no copy of archivelogs locally. After I made a switch for archivelog and sequence 47 was generated. Instantly, if I do a list backup archivelog you can see that already exists one copy of the same sequence.

For the copy, you can identify that it is a real-time redo and not a copy because of the handle. It has a specific format starting as $RSCN.

If I do one more time, the same behavior:

RMAN> list copy of archivelog all;

List of Archived Log Copies for database with db_unique_name ORCL19

=====================================================================

Key Thrd Seq S Low Time

------- ---- ------- - -------------------

4329 1 47 A 02-10-2019_00:09:32

Name: /u01/app/oracle/oradata/ORCL19/archivelog/2019_10_02/o1_mf_1_47_gs7mzgkn_.arc

RMAN> alter system archive log current;

Statement processed

RMAN> list copy of archivelog all;

List of Archived Log Copies for database with db_unique_name ORCL19

=====================================================================

Key Thrd Seq S Low Time

------- ---- ------- - -------------------

4329 1 47 A 02-10-2019_00:09:32

Name: /u01/app/oracle/oradata/ORCL19/archivelog/2019_10_02/o1_mf_1_47_gs7mzgkn_.arc

4406 1 48 A 02-10-2019_00:15:10

Name: /u01/app/oracle/oradata/ORCL19/archivelog/2019_10_02/o1_mf_1_48_gs7n1ck8_.arc

RMAN> list backup of archivelog all completed after "sysdate - 5/1440";

List of Backup Sets

===================

BS Key Size Device Type Elapsed Time Completion Time

------- ---------- ----------- ------------ -------------------

4365 595.50K SBT_TAPE 00:00:00 02-10-2019_00:15:34

BP Key: 4366 Status: AVAILABLE Compressed: YES Tag: TAG20191002T001534

Handle: $RSCN_1920977_RTIM_1009298641_THRD_1_SEQ_47_CTKEY_4222_BACKUP Media:

List of Archived Logs in backup set 4365

Thrd Seq Low SCN Low Time Next SCN Next Time

---- ------- ---------- ------------------- ---------- ---------

1 47 2370920 02-10-2019_00:09:32 2372594 02-10-2019_00:15:10

BS Key Size Device Type Elapsed Time Completion Time

------- ---------- ----------- ------------ -------------------

4445 41.00K SBT_TAPE 00:00:00 02-10-2019_00:16:32

BP Key: 4446 Status: AVAILABLE Compressed: YES Tag: TAG20191002T001632

Handle: $RSCN_1920977_RTIM_1009298641_THRD_1_SEQ_48_CTKEY_4294_BACKUP Media:

List of Archived Logs in backup set 4445

Thrd Seq Low SCN Low Time Next SCN Next Time

---- ------- ---------- ------------------- ---------- ---------

1 48 2372594 02-10-2019_00:15:10 2372793 02-10-2019_00:16:11

RMAN>

Another point is that if you try to do the backup of this archivelog you will see that they was already backed:

RMAN> BACKUP DEVICE TYPE SBT FILESPERSET 100 FORMAT '%U' ARCHIVELOG ALL NOT BACKED UP TAG 'BKP-ARCH'; Starting backup at 02-10-2019_00:18:30 current log archived released channel: ORA_DISK_1 allocated channel: ORA_SBT_TAPE_1 channel ORA_SBT_TAPE_1: SID=86 device type=SBT_TAPE channel ORA_SBT_TAPE_1: RA Library (ZDLRAS1) SID=93E18A9FC482277FE053010310AC3886 skipping archived logs of thread 1 from sequence 47 to 48; already backed up … …

Real-Time Redo… in real

The real-time redo transport configuration that I showed here just covered the configuration part. You can use it as a guide do understand what you need to configure and check. But this post does not cover full details about it.

For the next post about real-time redo, I will explore more details about the controversy about SYNC/ASYNC mode for transport. I will test and simulate other details, like a complete crash of the environment and verify if everything will be safe and we reach zero RPO.

But in the end, we have the full “Delta Push” for our database. The incremental forever strategy and real-time redo enabled.

Disclaimer: “The postings on this site are my own and don’t necessarily represent my actual employer positions, strategies or opinions. The information here was edited to be useful for general purpose, specific data and identifications were removed to allow reach the generic audience and to be useful for the community. Post protected by copyright.”

Pingback: ZDLRA, Real-Time Redo and Zero RPO | Fernando Simon

Pingback: ZDLRA, Multi-site protection - ZERO RPO for Primary and Standby | Fernando Simon

Pingback: ZDLRA, Virtual Private Catalog User - VPC | Fernando Simon

Hi ,

after Configuring Real-Time Redo, we are getting rman issue as below.

RMAN-08120: warning: archived log not deleted, not yet applied by standby

i don’t have standby DB. i just configure Real-Time Redo to ZDLRA.

Hi,

Please look my post: https://www.fernandosimon.com/blog/zdlra-dataguard-archivelogs-and-rman-08137/

Sorry to reply so late.

Hi Simon,

thanks for your reply.

How can we monitor ZDLRA, Real-Time Redo status ? in case of any network failure while transfering archives from DB server to ZDLRA ?

any select query to check the GAP -or- status ?

i can see Error messages reporting under v$rman_status view , but this view is reporting DB backup errors also.

so, do we have any specific view to monitor ZDLRA , Real-Time Redo apply status ?

Hi,

You can check inside of ZDLRA the table “ra_database”, when you have real-time redo the column NZDL_ACTIVE report YES, and the UNPROTECTED_WINDOW report the last received. So, if you have some problem, network, you can monitor this column to check.

Another is to check the V$DATAGUARD_PROCESSES inside ZDLRA.

Bryan made some posts about this: https://bryangrenn.blogspot.com/2020/02/what-are-those-processes-in.html and https://bryangrenn.blogspot.com/2019/11/dataguard-and-logarchivemaxprocess.html

Good information…. really useful…..

Hello Fernando,

In a classical dataguard configuration, it is possible to monitor the lag of the standby compared to the primary by querying v$standby_event_histogram on the standby.

This view does not seem to be populated on zdlra. The RA_* views do not seem to provide such information. Do you have any idea on how to monitor the lag when the standby is replaced by a zdlra system ?

Thanks

Hi,

Sorry about the delay to reply.

You can use the RA_DATABASE and check the NZDL_ACTIVE column. Others columns over this column show you unsynchronized/unprotect time. If using real-time redo transport it will tend to zero.

Best regards.

Does ZDLRA store archivelogs receive from Protected database which it sent ?? How it is managed from overfilling ?? How should we delete it in ZDLRA appliance ??

Hello,

Sorry but I not fully understood the question.

The archivelog follow your backup policy (the same from datafiles backups).

Hope that it helps.

Fernando Simon