With the release of Oracle Database 21c, it is time to study the new features. The 21c version of Grid Infrastructure (and ASM) has been released, and an upgrade from older versions can be executed. It is not a complex task, but some details need to be verified. In this post, I will show the steps to upgrade the Grid Infrastructure to 21c. If you need to upgrade from 18c to 19c, you can check my previous post.

Planning

The first step is to plan everything. You need to check the requirements, read the docs, download the files, and plan the actions. While I am writing this post, there are no official MOS docs about how to upgrade the GI to 21c. The first place to look for the procedure is the official doc for GI Installation and Upgrade, mainly chapter 11. Another good example is 19c Grid Infrastructure and Database Upgrade steps for Exadata Database Machine running on Oracle Linux (Doc ID 2542082.1).

So, this is what you need to consider:

- OS version: Check if it is compatible with 21c. If you are using ASMLIB or ASM Filter, check the kernel modules and the certification matrix.

- Current GI: Maybe you need to apply some patches. The best practice is to use the latest version.

- Used features (like AFD, HAIP, Resources): Check the compatibility of the old features with 21c. Maybe you need to remove HAIP or change your CRS resources.

- 21c requirements for GI: Check memory, space, and database versions.

- Oracle Home patches (for databases running): Check if you need to apply some patches for your database to be compatible with GI 21c.

- Backup of your Databases: Just in case you need to roll back something.
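For the memory and swap item in the list above, a small pre-check can save one round of runcluvfy. This is a minimal sketch, not the installer's own logic: it assumes the sizing rule of swap equal to RAM for servers between 4 GB and 16 GB and 16 GB of swap above that, which agrees with the "Required" swap value that runcluvfy reports later in this post. The function names `required_swap_kb` and `swap_status` are hypothetical.

```shell
#!/bin/sh
# Sketch: compare the configured swap with the amount the pre-check is expected to require.
# ASSUMPTION: swap must equal RAM up to 16 GB and is capped at 16 GB above that.
# Confirm the rule in the installation guide for your release.

required_swap_kb() {
    # $1 = physical memory in KB
    ram_kb=$1
    cap_kb=$((16 * 1024 * 1024))   # 16 GB expressed in KB
    if [ "$ram_kb" -gt "$cap_kb" ]; then
        echo "$cap_kb"
    else
        echo "$ram_kb"
    fi
}

swap_status() {
    # $1 = physical memory in KB, $2 = configured swap in KB
    need=$(required_swap_kb "$1")
    if [ "$2" -ge "$need" ]; then
        echo "swap OK: found ${2} KB, required ${need} KB"
    else
        echo "swap too small: found ${2} KB, required ${need} KB"
    fi
}

# Use the live values when running on Linux
if [ -r /proc/meminfo ]; then
    ram=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
    swap=$(awk '/^SwapTotal:/ {print $2}' /proc/meminfo)
    swap_status "$ram" "$swap"
fi
```

Run it on every node of the cluster, since the pre-check evaluates each node separately.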

My environment

The environment that I am using for this example is:

- Oracle Linux 8.4.

- GI cluster with two nodes.

- ASM Filter for disk access.

- 19.11 for GI.

- 19.12 for Oracle Home database.

I personally recommend upgrading your current GI to 19c before the upgrade, or applying one of the latest PSUs for your running version. This avoids a lot of errors since most of the known bugs will be patched. Check my environment below:

[root@oel8n1 ~]# uname -a

Linux oel8n1.oralocal 5.4.17-2102.201.3.el8uek.x86_64 #2 SMP Fri Apr 23 09:05:57 PDT 2021 x86_64 x86_64 x86_64 GNU/Linux

[root@oel8n1 ~]#

[root@oel8n1 ~]#

[root@oel8n1 ~]# cat /etc/redhat-release

Red Hat Enterprise Linux release 8.4 (Ootpa)

[root@oel8n1 ~]# su - grid

[grid@oel8n1 install]$ $ORACLE_HOME/OPatch/opatch lspatches

32399816;OJVM RELEASE UPDATE: 19.11.0.0.210420 (32399816)

32585572;DBWLM RELEASE UPDATE 19.0.0.0.0 (32585572)

32584670;TOMCAT RELEASE UPDATE 19.0.0.0.0 (32584670)

32579761;OCW RELEASE UPDATE 19.11.0.0.0 (32579761)

32576499;ACFS RELEASE UPDATE 19.11.0.0.0 (32576499)

32545013;Database Release Update : 19.11.0.0.210420 (32545013)

OPatch succeeded.

[grid@oel8n1 install]$

[grid@oel8n1 install]$ crsctl stat res -t

--------------------------------------------------------------------------------

Name Target State Server State details

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.LISTENER.lsnr

ONLINE ONLINE oel8n1 STABLE

ONLINE ONLINE oel8n2 STABLE

ora.chad

OFFLINE OFFLINE oel8n1 STABLE

OFFLINE OFFLINE oel8n2 STABLE

ora.net1.network

ONLINE ONLINE oel8n1 STABLE

ONLINE ONLINE oel8n2 STABLE

ora.ons

ONLINE ONLINE oel8n1 STABLE

ONLINE ONLINE oel8n2 STABLE

ora.proxy_advm

OFFLINE OFFLINE oel8n1 STABLE

OFFLINE OFFLINE oel8n2 STABLE

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.ASMNET1LSNR_ASM.lsnr(ora.asmgroup)

1 ONLINE ONLINE oel8n1 STABLE

2 ONLINE ONLINE oel8n2 STABLE

ora.DATA.dg(ora.asmgroup)

1 ONLINE ONLINE oel8n1 STABLE

2 ONLINE ONLINE oel8n2 STABLE

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE oel8n2 STABLE

ora.LISTENER_SCAN2.lsnr

1 ONLINE ONLINE oel8n1 STABLE

ora.LISTENER_SCAN3.lsnr

1 ONLINE ONLINE oel8n1 STABLE

ora.RECO.dg(ora.asmgroup)

1 ONLINE ONLINE oel8n1 STABLE

2 ONLINE ONLINE oel8n2 STABLE

ora.SYSTEMDG.dg(ora.asmgroup)

1 ONLINE ONLINE oel8n1 STABLE

2 ONLINE ONLINE oel8n2 STABLE

ora.asm(ora.asmgroup)

1 ONLINE ONLINE oel8n1 Started,STABLE

2 ONLINE ONLINE oel8n2 Started,STABLE

ora.asmnet1.asmnetwork(ora.asmgroup)

1 ONLINE ONLINE oel8n1 STABLE

2 ONLINE ONLINE oel8n2 STABLE

ora.cdb19t.db

1 ONLINE ONLINE oel8n1 Open,HOME=/u01/app/o

racle/product/19.0.0

.0/dbhome_1,STABLE

2 ONLINE ONLINE oel8n2 Open,HOME=/u01/app/o

racle/product/19.0.0

.0/dbhome_1,STABLE

ora.cvu

1 ONLINE ONLINE oel8n1 STABLE

ora.oel8n1.vip

1 ONLINE ONLINE oel8n1 STABLE

ora.oel8n2.vip

1 ONLINE ONLINE oel8n2 STABLE

ora.qosmserver

1 ONLINE ONLINE oel8n1 STABLE

ora.scan1.vip

1 ONLINE ONLINE oel8n2 STABLE

ora.scan2.vip

1 ONLINE ONLINE oel8n1 STABLE

ora.scan3.vip

1 ONLINE ONLINE oel8n1 STABLE

--------------------------------------------------------------------------------

[grid@oel8n1 install]$

At the end of the post, I attached the log of a GI upgrade from 19.5 to 21c. That particular GI runs using ASMLIB, and the server in that case runs on OEL 7.6.

Upgrading

Creating folders

After the requirements are met, you can create the folders for your GI on all nodes of the cluster:

[root@oel8n1 ~]# mkdir -p /u01/app/21.0.0.0/grid

[root@oel8n1 ~]# chown grid /u01/app/21.0.0.0/grid

[root@oel8n1 ~]# chgrp -R oinstall /u01/app/21.0.0.0/grid

[root@oel8n1 ~]#

####################################

#

# Second NODE

#

####################################

[root@oel8n2 ~]# mkdir -p /u01/app/21.0.0.0/grid

[root@oel8n2 ~]# chown grid /u01/app/21.0.0.0/grid

[root@oel8n2 ~]# chgrp -R oinstall /u01/app/21.0.0.0/grid

[root@oel8n2 ~]#

Unzip GI

Next, on the first node, you can unzip the GI installation (as the same user that runs your GI today). Pay attention to the source and destination:

[grid@oel8n1 ~]$ unzip -qa /u01/install/Grid/V1011504-01.zip -d /u01/app/21.0.0.0/grid

[grid@oel8n1 ~]$

runcluvfy

The next step is executing runcluvfy to check if all the requirements are met to upgrade the GI. Check the usage below and pay attention to the parameters. The output is cropped, but you can check the full output at this link:

[grid@oel8n1 grid]$ ./runcluvfy.sh stage -pre crsinst -upgrade -rolling -src_crshome /u01/app/19.0.0.0/grid -dest_crshome /u01/app/21.0.0.0/grid -dest_version 21.0.0.0.0 -fixup -verbose

Performing following verification checks ...

Physical Memory ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

oel8n2 11.6928GB (1.2260776E7KB) 8GB (8388608.0KB) passed

oel8n1 11.6928GB (1.2260776E7KB) 8GB (8388608.0KB) passed

Physical Memory ...PASSED

...

...

...

DefaultTasksMax parameter ...PASSED

zeroconf check ...PASSED

ASM Filter Driver configuration ...PASSED

Systemd login manager IPC parameter ...PASSED

Pre-check for cluster services setup was unsuccessful.

Checks did not pass for the following nodes:

oel8n2,oel8n1

Failures were encountered during execution of CVU verification request "stage -pre crsinst".

Swap Size ...FAILED

oel8n2: PRVF-7573 : Sufficient swap size is not available on node "oel8n2"

[Required = 11.6928GB (1.2260776E7KB) ; Found = 3.9648GB (4157436.0KB)]

oel8n1: PRVF-7573 : Sufficient swap size is not available on node "oel8n1"

[Required = 11.6928GB (1.2260776E7KB) ; Found = 3.9648GB (4157436.0KB)]

CRS Integrity ...FAILED

that default ASM disk discovery string is in use ...FAILED

RPM Package Manager database ...INFORMATION

PRVG-11250 : The check "RPM Package Manager database" was not performed because

it needs 'root' user privileges.

Refer to My Oracle Support notes "2548970.1" for more details regarding errors

PRVG-11250".

CVU operation performed: stage -pre crsinst

Date: Aug 15, 2021 9:44:11 AM

Clusterware version: 19.0.0.0.0

CVU home: /u01/app/21.0.0.0/grid

Grid home: /u01/app/19.0.0.0/grid

User: grid

Operating system: Linux5.4.17-2102.201.3.el8uek.x86_64

[grid@oel8n1 grid]$

Above you can see that I got three findings: swap, RPM database, and ASM disk string (asm_diskstring). The summary also lists CRS Integrity as failed. About the ASM disk string, the explanation (according to the doc) is:

The default value of ASM_DISKSTRING might not find all disks in all situations. In addition, if your installation uses multipathing software, then the software might place pseudo-devices in a path that is different from the operating system default.

But in my case everything is correct (I am using AFD, and the correct way to set the discovery string is with dsset), so I can continue:

[grid@oel8n1 grid]$ asmcmd

ASMCMD> dsget

parameter:

profile:/dev/sd*,AFD:*,AFD:*

ASMCMD>

[grid@oel8n1 grid]$

If you face any error, please verify and fix it before continuing.
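The verbose runcluvfy output is long, so it helps to pull out only the checks that failed before deciding what to fix. The sketch below assumes the summary format shown in this post (a check name followed by `...FAILED`). The function name `failed_checks` and the log file name in the usage comment are hypothetical.

```shell
#!/bin/sh
# Sketch: list the distinct checks that runcluvfy reported as FAILED.
# ASSUMPTION: each failed check appears on a line like "Swap Size ...FAILED".

failed_checks() {
    # Reads runcluvfy output on stdin and prints one failed check name per line
    grep -F '...FAILED' |
        sed 's/^[[:space:]]*//; s/[[:space:]]*\.\.\.FAILED.*$//' |
        LC_ALL=C sort -u
}

# Usage (file name is only an example):
#   ./runcluvfy.sh stage -pre crsinst -upgrade ... -verbose > /tmp/cluvfy_pre.log
#   failed_checks < /tmp/cluvfy_pre.log
```

Checks reported as INFORMATION (like the RPM database check above) are not listed by this filter and still need a manual look.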

gridSetup.sh

Since 12.1, it is possible to apply patches before calling the installation. In this case (since 21c was released a few days ago), there is no RU or RUR to be applied. If one exists (by the time you are upgrading and following this post), you can call gridSetup.sh with the -applyRU or -applyRUR parameter. You can find one example in my previous post.

You need to unset ORACLE_HOME, ORACLE_BASE, and ORACLE_SID before calling gridSetup.sh:

[grid@oel8n1 ~]$ unset ORACLE_HOME

[grid@oel8n1 ~]$ unset ORACLE_BASE

[grid@oel8n1 ~]$ unset ORACLE_SID

[grid@oel8n1 ~]$

[grid@oel8n1 ~]$ cd /u01/app/21.0.0.0/grid/

[grid@oel8n1 grid]$

[grid@oel8n1 grid]$ ./gridSetup.sh

ERROR: Unable to verify the graphical display setup. This application requires X display. Make sure that xdpyinfo exist under PATH variable.

Launching Oracle Grid Infrastructure Setup Wizard...
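Before launching the installer, a small guard can confirm that the three variables are really gone from the current shell and that xdpyinfo (mentioned in the message above) is reachable. This is only a sketch. The function name `oracle_env_leftovers` is hypothetical.

```shell
#!/bin/sh
# Sketch: report Oracle variables that are still set before calling gridSetup.sh.

oracle_env_leftovers() {
    # Prints the name of each variable that still has a value in this shell
    for v in ORACLE_HOME ORACLE_BASE ORACLE_SID; do
        eval "val=\${$v-}"
        if [ -n "$val" ]; then
            echo "$v"
        fi
    done
}

leftovers=$(oracle_env_leftovers)
if [ -n "$leftovers" ]; then
    echo "Still set, unset before running gridSetup.sh:"
    echo "$leftovers"
else
    echo "ORACLE_HOME, ORACLE_BASE and ORACLE_SID are not set"
fi

# The installer uses xdpyinfo to verify the X display
if ! command -v xdpyinfo >/dev/null 2>&1; then
    echo "xdpyinfo not found in PATH"
fi
```

Source the script (or paste the function) in the same session that will call gridSetup.sh, because a new login shell would load the variables from the profile again.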

And after we have:

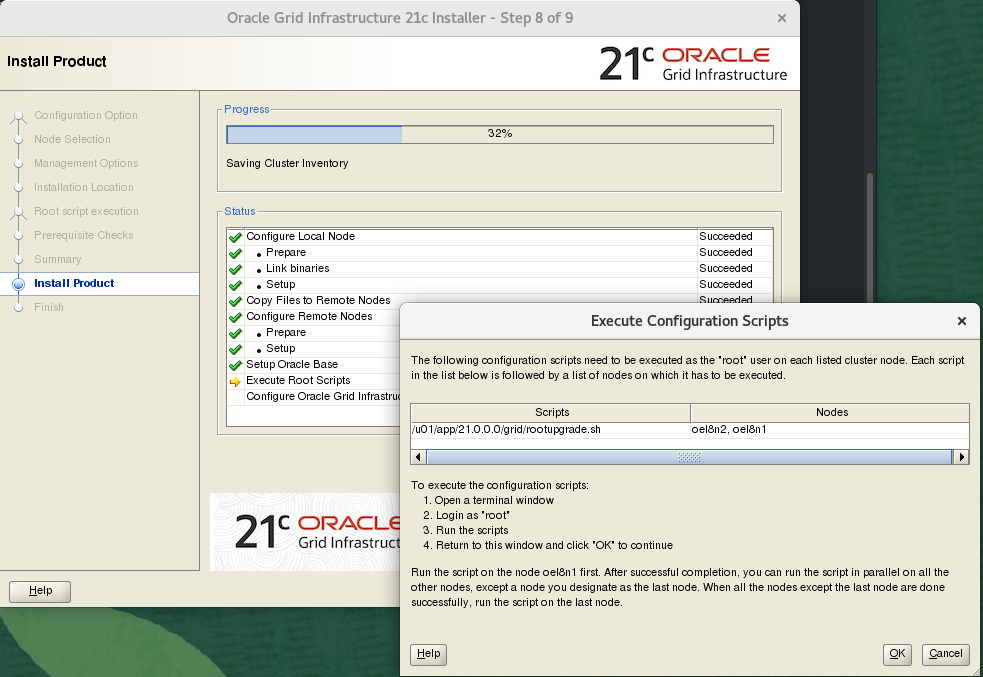

The next steps are basically Next, Next, Finish until the moment that we need to execute rootupgrade.sh on each node. Check the gallery below:

And the rootupgrade request for both nodes:

Before we execute rootupgrade.sh, we need to either relocate the databases/services to the second node or stop all databases. If you relocate, your databases will continue to run and you will have minimal downtime. Here I stopped my databases:

[root@oel8n1 ~]# su - oracle

[oracle@oel8n1 ~]$ export ORACLE_HOME=/u01/app/oracle/product/19.0.0.0/dbhome_1

[oracle@oel8n1 ~]$ export PATH=$ORACLE_HOME/bin:$PATH

[oracle@oel8n1 ~]$ srvctl status database -d cdb19t

Instance cdb19t1 is running on node oel8n1

Instance cdb19t2 is running on node oel8n2

[oracle@oel8n1 ~]$

[oracle@oel8n1 ~]$ srvctl stop database -d cdb19t -o immediate

[oracle@oel8n1 ~]$

[oracle@oel8n1 ~]$ exit

logout

[root@oel8n1 ~]#

And now we can call rootupgrade.sh on each node (one at a time).

[root@oel8n1 ~]# /u01/app/21.0.0.0/grid/rootupgrade.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/21.0.0.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The file "oraenv" already exists in /usr/local/bin. Overwrite it? (y/n)

[n]: y

Copying oraenv to /usr/local/bin ...

The file "coraenv" already exists in /usr/local/bin. Overwrite it? (y/n)

[n]: y

Copying coraenv to /usr/local/bin ...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Relinking oracle with rac_on option

Using configuration parameter file: /u01/app/21.0.0.0/grid/crs/install/crsconfig_params

The log of current session can be found at:

/u01/app/grid/crsdata/oel8n1/crsconfig/rootcrs_oel8n1_2021-08-15_10-39-20AM.log

2021/08/15 10:39:46 CLSRSC-595: Executing upgrade step 1 of 18: 'UpgradeTFA'.

2021/08/15 10:39:46 CLSRSC-4015: Performing install or upgrade action for Oracle Autonomous Health Framework (AHF).

2021/08/15 10:39:46 CLSRSC-595: Executing upgrade step 2 of 18: 'ValidateEnv'.

2021/08/15 10:39:52 CLSRSC-4005: Failed to patch Oracle Autonomous Health Framework (AHF). Grid Infrastructure operations will continue.

2021/08/15 10:39:53 CLSRSC-595: Executing upgrade step 3 of 18: 'GetOldConfig'.

2021/08/15 10:39:54 CLSRSC-464: Starting retrieval of the cluster configuration data

2021/08/15 10:40:09 CLSRSC-692: Checking whether CRS entities are ready for upgrade. This operation may take a few minutes.

2021/08/15 10:41:10 CLSRSC-693: CRS entities validation completed successfully.

2021/08/15 10:41:22 CLSRSC-515: Starting OCR manual backup.

2021/08/15 10:41:37 CLSRSC-516: OCR manual backup successful.

2021/08/15 10:41:48 CLSRSC-465: Retrieval of the cluster configuration data has successfully completed.

2021/08/15 10:41:48 CLSRSC-595: Executing upgrade step 4 of 18: 'GenSiteGUIDs'.

2021/08/15 10:41:48 CLSRSC-595: Executing upgrade step 5 of 18: 'UpgPrechecks'.

2021/08/15 10:42:07 CLSRSC-595: Executing upgrade step 6 of 18: 'SetupOSD'.

2021/08/15 10:42:08 CLSRSC-595: Executing upgrade step 7 of 18: 'PreUpgrade'.

2021/08/15 10:42:50 CLSRSC-486:

At this stage of upgrade, the OCR has changed.

Any attempt to downgrade the cluster after this point will require a complete cluster outage to restore the OCR.

2021/08/15 10:42:50 CLSRSC-541:

To downgrade the cluster:

1. All nodes that have been upgraded must be downgraded.

2021/08/15 10:42:51 CLSRSC-542:

2. Before downgrading the last node, the Grid Infrastructure stack on all other cluster nodes must be down.

2021/08/15 10:42:51 CLSRSC-468: Setting Oracle Clusterware and ASM to rolling migration mode

2021/08/15 10:42:52 CLSRSC-482: Running command: '/u01/app/19.0.0.0/grid/bin/crsctl start rollingupgrade 21.0.0.0.0'

CRS-1131: The cluster was successfully set to rolling upgrade mode.

2021/08/15 10:42:58 CLSRSC-482: Running command: '/u01/app/21.0.0.0/grid/bin/asmca -silent -upgradeNodeASM -nonRolling false -oldCRSHome /u01/app/19.0.0.0/grid -oldCRSVersion 19.0.0.0.0 -firstNode true -startRolling false '

2021/08/15 10:43:04 CLSRSC-469: Successfully set Oracle Clusterware and ASM to rolling migration mode

2021/08/15 10:43:10 CLSRSC-466: Starting shutdown of the current Oracle Grid Infrastructure stack

2021/08/15 10:43:50 CLSRSC-467: Shutdown of the current Oracle Grid Infrastructure stack has successfully completed.

2021/08/15 10:43:55 CLSRSC-595: Executing upgrade step 8 of 18: 'CheckCRSConfig'.

2021/08/15 10:43:57 CLSRSC-595: Executing upgrade step 9 of 18: 'UpgradeOLR'.

2021/08/15 10:44:16 CLSRSC-595: Executing upgrade step 10 of 18: 'ConfigCHMOS'.

2021/08/15 10:44:16 CLSRSC-595: Executing upgrade step 11 of 18: 'UpgradeAFD'.

2021/08/15 10:45:38 CLSRSC-595: Executing upgrade step 12 of 18: 'createOHASD'.

2021/08/15 10:45:51 CLSRSC-595: Executing upgrade step 13 of 18: 'ConfigOHASD'.

2021/08/15 10:45:52 CLSRSC-329: Replacing Clusterware entries in file 'oracle-ohasd.service'

2021/08/15 10:46:46 CLSRSC-595: Executing upgrade step 14 of 18: 'InstallACFS'.

2021/08/15 10:47:22 CLSRSC-595: Executing upgrade step 15 of 18: 'InstallKA'.

2021/08/15 10:47:31 CLSRSC-595: Executing upgrade step 16 of 18: 'UpgradeCluster'.

2021/08/15 10:48:43 CLSRSC-343: Successfully started Oracle Clusterware stack

clscfg: EXISTING configuration version 19 detected.

Successfully taken the backup of node specific configuration in OCR.

Successfully accumulated necessary OCR keys.

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

2021/08/15 10:49:07 CLSRSC-595: Executing upgrade step 17 of 18: 'UpgradeNode'.

2021/08/15 10:49:12 CLSRSC-474: Initiating upgrade of resource types

2021/08/15 10:49:19 CLSRSC-475: Upgrade of resource types successfully initiated.

2021/08/15 10:49:25 CLSRSC-595: Executing upgrade step 18 of 18: 'PostUpgrade'.

2021/08/15 10:49:29 CLSRSC-474: Initiating upgrade of resource types

2021/08/15 10:49:34 CLSRSC-475: Upgrade of resource types successfully initiated.

2021/08/15 10:49:40 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded

[root@oel8n1 ~]#

And on the second node (remember to relocate your databases back to the first node if you relocated them before):

[root@oel8n2 ~]# /u01/app/21.0.0.0/grid/rootupgrade.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/21.0.0.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The file "oraenv" already exists in /usr/local/bin. Overwrite it? (y/n)

[n]: y

Copying oraenv to /usr/local/bin ...

The file "coraenv" already exists in /usr/local/bin. Overwrite it? (y/n)

[n]: y

Copying coraenv to /usr/local/bin ...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Relinking oracle with rac_on option

Using configuration parameter file: /u01/app/21.0.0.0/grid/crs/install/crsconfig_params

The log of current session can be found at:

/u01/app/grid/crsdata/oel8n2/crsconfig/rootcrs_oel8n2_2021-08-15_10-50-57AM.log

2021/08/15 10:51:12 CLSRSC-595: Executing upgrade step 1 of 18: 'UpgradeTFA'.

2021/08/15 10:51:12 CLSRSC-4015: Performing install or upgrade action for Oracle Autonomous Health Framework (AHF).

2021/08/15 10:51:12 CLSRSC-595: Executing upgrade step 2 of 18: 'ValidateEnv'.

2021/08/15 10:51:13 CLSRSC-595: Executing upgrade step 3 of 18: 'GetOldConfig'.

2021/08/15 10:51:13 CLSRSC-464: Starting retrieval of the cluster configuration data

2021/08/15 10:51:18 CLSRSC-4005: Failed to patch Oracle Autonomous Health Framework (AHF). Grid Infrastructure operations will continue.

2021/08/15 10:51:26 CLSRSC-465: Retrieval of the cluster configuration data has successfully completed.

2021/08/15 10:51:26 CLSRSC-595: Executing upgrade step 4 of 18: 'GenSiteGUIDs'.

2021/08/15 10:51:26 CLSRSC-595: Executing upgrade step 5 of 18: 'UpgPrechecks'.

2021/08/15 10:51:29 CLSRSC-595: Executing upgrade step 6 of 18: 'SetupOSD'.

2021/08/15 10:51:29 CLSRSC-595: Executing upgrade step 7 of 18: 'PreUpgrade'.

2021/08/15 10:51:38 CLSRSC-466: Starting shutdown of the current Oracle Grid Infrastructure stack

2021/08/15 10:52:13 CLSRSC-467: Shutdown of the current Oracle Grid Infrastructure stack has successfully completed.

2021/08/15 10:52:16 CLSRSC-595: Executing upgrade step 8 of 18: 'CheckCRSConfig'.

2021/08/15 10:52:19 CLSRSC-595: Executing upgrade step 9 of 18: 'UpgradeOLR'.

2021/08/15 10:52:27 CLSRSC-595: Executing upgrade step 10 of 18: 'ConfigCHMOS'.

2021/08/15 10:52:27 CLSRSC-595: Executing upgrade step 11 of 18: 'UpgradeAFD'.

2021/08/15 10:53:33 CLSRSC-595: Executing upgrade step 12 of 18: 'createOHASD'.

2021/08/15 10:53:37 CLSRSC-595: Executing upgrade step 13 of 18: 'ConfigOHASD'.

2021/08/15 10:53:37 CLSRSC-329: Replacing Clusterware entries in file 'oracle-ohasd.service'

2021/08/15 10:54:18 CLSRSC-595: Executing upgrade step 14 of 18: 'InstallACFS'.

2021/08/15 10:55:17 CLSRSC-595: Executing upgrade step 15 of 18: 'InstallKA'.

2021/08/15 10:55:20 CLSRSC-595: Executing upgrade step 16 of 18: 'UpgradeCluster'.

2021/08/15 10:56:47 CLSRSC-343: Successfully started Oracle Clusterware stack

clscfg: EXISTING configuration version 21 detected.

Successfully taken the backup of node specific configuration in OCR.

Successfully accumulated necessary OCR keys.

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

2021/08/15 10:57:06 CLSRSC-595: Executing upgrade step 17 of 18: 'UpgradeNode'.

Start upgrade invoked..

2021/08/15 10:57:14 CLSRSC-478: Setting Oracle Clusterware active version on the last node to be upgraded

2021/08/15 10:57:14 CLSRSC-482: Running command: '/u01/app/21.0.0.0/grid/bin/crsctl set crs activeversion'

Started to upgrade the active version of Oracle Clusterware. This operation may take a few minutes.

Started to upgrade CSS.

CSS was successfully upgraded.

Started to upgrade Oracle ASM.

Started to upgrade CRS.

CRS was successfully upgraded.

Started to upgrade Oracle ACFS.

Oracle ACFS was successfully upgraded.

Successfully upgraded the active version of Oracle Clusterware.

Oracle Clusterware active version was successfully set to 21.0.0.0.0.

2021/08/15 10:58:24 CLSRSC-479: Successfully set Oracle Clusterware active version

2021/08/15 10:58:26 CLSRSC-476: Finishing upgrade of resource types

2021/08/15 10:58:27 CLSRSC-477: Successfully completed upgrade of resource types

2021/08/15 10:59:41 CLSRSC-595: Executing upgrade step 18 of 18: 'PostUpgrade'.

Successfully updated XAG resources.

2021/08/15 11:00:02 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded

[root@oel8n2 ~]#

As you can see, both nodes reported warnings due to AHF. This occurred due to an unzip mismatch (the unzip for Linux does not handle the file well; you can unzip on Windows and move the installation to Linux to be executed later):

> Upgrading /opt/oracle.ahf

> error [/tmp/.ahf.60044/ahf_install.60044.zip]: missing 1519908 bytes in zipfile

> (attempting to process anyway)

> error [/tmp/.ahf.60044/ahf_install.60044.zip]: start of central directory not found;

> zipfile corrupt.

> (please check that you have transferred or created the zipfile in the

> appropriate BINARY mode and that you have compiled UnZip properly)

> /u01/app/21.0.0.0/grid/crs/install/ahf_setup: line 2781: /opt/oracle.ahf/bin/tfactl: No such file or directory

> /u01/app/21.0.0.0/grid/crs/install/ahf_setup: line 2785: /opt/oracle.ahf/bin/tfactl: No such file or directory

You can follow my post about how to upgrade AHF to upgrade it properly. I uploaded the whole logfile of rootupgrade.sh for node01 and node02.
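If you want to know where rootupgrade.sh spends its time, the CLSRSC-595 lines in the output above are enough. The sketch below prints each step with the seconds elapsed until the next step starts. It assumes the line format shown in this post and that the run does not cross midnight. The function name `step_durations` and the file name in the usage comment are hypothetical.

```shell
#!/bin/sh
# Sketch: summarize how long each rootupgrade.sh step took.
# ASSUMPTION: lines look like
#   2021/08/15 10:39:46 CLSRSC-595: Executing upgrade step 1 of 18: 'UpgradeTFA'.
# and all timestamps belong to the same day.

step_durations() {
    # Reads the rootupgrade.sh output on stdin
    awk -v q="'" '
    /CLSRSC-595: Executing upgrade step/ {
        split($2, t, ":")
        now = t[1] * 3600 + t[2] * 60 + t[3]
        # Close the previous step with the time elapsed until this one started
        if (seen) printf "%s %ds\n", label, now - start
        name = $NF
        gsub(q, "", name)      # drop the quotes around the step name
        sub(/\.$/, "", name)   # drop the trailing dot
        label = "step " $7 " " name
        start = now
        seen = 1
    }
    END { if (seen) print label " (last step in input)" }
    '
}

# Usage (file name is only an example):
#   step_durations < /tmp/rootupgrade_node1.out
```

In the first node's output above, for example, step 11 ('UpgradeAFD') starts at 10:44:16 and step 12 at 10:45:38, so it is one of the longer steps.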

If you had errors, please open an SR with MOS to investigate the issue.

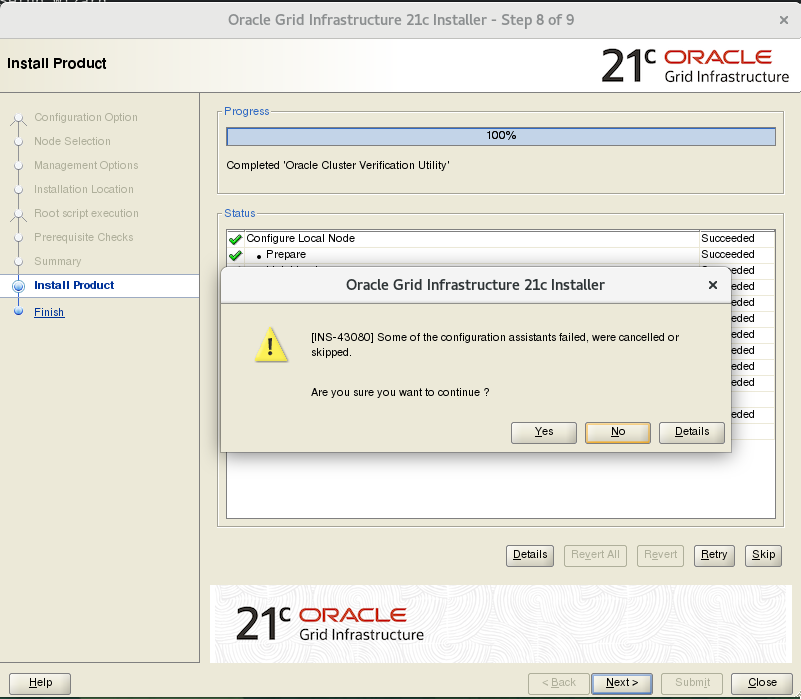

So, the upgrade (the most critical part) is finished and we can continue the process:

As you can see, the end of the process runs runcluvfy again and the same errors are reported. If you got errors, please review them before continuing. Here we can continue since they are the same as reported before:

Post Upgrade

We have some small activities to do after the upgrade. Most are related to the user environment variables and removing the old GI.

The first is to start the databases that we stopped:

[root@oel8n1 ~]# su - oracle

[oracle@oel8n1 ~]$ export ORACLE_HOME=/u01/app/oracle/product/19.0.0.0/dbhome_1

[oracle@oel8n1 ~]$ export PATH=$ORACLE_HOME/bin:$PATH

[oracle@oel8n1 ~]$ srvctl start database -d cdb19t

[oracle@oel8n1 ~]$

[oracle@oel8n1 ~]$ exit

logout

[root@oel8n1 ~]#

Fix the environment of the user that runs GI (grid in my environment):

[root@oel8n1 ~]# su - grid

[grid@oel8n1 ~]$

[grid@oel8n1 ~]$ cat .bash_profile

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

ORACLE_HOME=/u01/app/19.0.0.0/grid

ORACLE_BASE=/u01/app/grid

ORACLE_SID=+ASM1

PATH=$ORACLE_HOME/bin:$PATH

umask 022

export ORACLE_HOME

export ORACLE_BASE

export ORACLE_SID

PATH=$PATH:$HOME/.local/bin:$HOME/bin

export PATH

[grid@oel8n1 ~]$

[grid@oel8n1 ~]$ vi .bash_profile

[grid@oel8n1 ~]$

[grid@oel8n1 ~]$ cat .bash_profile

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

ORACLE_HOME=/u01/app/21.0.0.0/grid

ORACLE_BASE=/u01/app/grid

ORACLE_SID=+ASM1

PATH=$ORACLE_HOME/bin:$PATH

umask 022

export ORACLE_HOME

export ORACLE_BASE

export ORACLE_SID

PATH=$PATH:$HOME/.local/bin:$HOME/bin

export PATH

[grid@oel8n1 ~]$

[grid@oel8n1 ~]$

####################################

#

# Second NODE

#

####################################

[root@oel8n2 ~]# su - grid

[grid@oel8n2 ~]$

[grid@oel8n2 ~]$

[grid@oel8n2 ~]$ cat .bash_profile

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

ORACLE_HOME=/u01/app/19.0.0.0/grid

ORACLE_BASE=/u01/app/grid

ORACLE_SID=+ASM2

PATH=$ORACLE_HOME/bin:$PATH

umask 022

export ORACLE_HOME

export ORACLE_BASE

export ORACLE_SID

PATH=$PATH:$HOME/.local/bin:$HOME/bin

export PATH

[grid@oel8n2 ~]$

[grid@oel8n2 ~]$ vi .bash_profile

[grid@oel8n2 ~]$

[grid@oel8n2 ~]$

[grid@oel8n2 ~]$ cat .bash_profile

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

ORACLE_HOME=/u01/app/21.0.0.0/grid

ORACLE_BASE=/u01/app/grid

ORACLE_SID=+ASM2

PATH=$ORACLE_HOME/bin:$PATH

umask 022

export ORACLE_HOME

export ORACLE_BASE

export ORACLE_SID

PATH=$PATH:$HOME/.local/bin:$HOME/bin

export PATH

[grid@oel8n2 ~]$
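The same profile change can be scripted instead of edited with vi, which is handy when the cluster has more nodes. This is a minimal sketch: it assumes the profile contains a single line starting with `ORACLE_HOME=`, as in the files shown above, and that the new path has no `#` character (used as the sed delimiter). The function name `switch_grid_home` is hypothetical.

```shell
#!/bin/sh
# Sketch: point ORACLE_HOME in a profile file to the new Grid home.
# ASSUMPTION: the profile has a line starting with "ORACLE_HOME=".

switch_grid_home() {
    # $1 = profile file, $2 = new Grid home
    profile=$1
    new_home=$2
    # Keep a copy of the original file next to it
    cp "$profile" "$profile.bak" || return 1
    # Rewrite only the ORACLE_HOME assignment and leave every other line untouched
    sed "s#^ORACLE_HOME=.*#ORACLE_HOME=${new_home}#" "$profile.bak" > "$profile"
}

# Usage, as the grid user on each node:
#   switch_grid_home "$HOME/.bash_profile" /u01/app/21.0.0.0/grid
```

ORACLE_SID is not touched, so +ASM1 and +ASM2 stay as they are on each node. Log in again (or source the profile) to pick up the new value.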

Remove old GI from oraInventory (commands executed as grid user):

[root@oel8n1 ~]# su - grid

[grid@oel8n1 ~]$

[grid@oel8n1 ~]$ cat /u01/app/oraInventory/ContentsXML/inventory.xml |grep grid

<HOME NAME="OraGI19Home1" LOC="/u01/app/19.0.0.0/grid" TYPE="O" IDX="1" CRS="true">

<HOME NAME="OraGI21Home1" LOC="/u01/app/21.0.0.0/grid" TYPE="O" IDX="3" CRS="true"/>

[grid@oel8n1 ~]$

[grid@oel8n1 ~]$ unset ORACLE_HOME

[grid@oel8n1 ~]$ export ORACLE_HOME=/u01/app/19.0.0.0/grid

[grid@oel8n1 ~]$

[grid@oel8n1 ~]$ $ORACLE_HOME/oui/bin/runInstaller -detachHome -silent ORACLE_HOME=/u01/app/19.0.0.0/grid

Starting Oracle Universal Installer...

Checking swap space: must be greater than 500 MB. Actual 4046 MB Passed

The inventory pointer is located at /etc/oraInst.loc

You can find the log of this install session at:

/u01/app/oraInventory/logs/DetachHome2021-08-15_11-58-00AM.log

'DetachHome' was successful.

[grid@oel8n1 ~]$

[grid@oel8n1 ~]$ cat /u01/app/oraInventory/ContentsXML/inventory.xml |grep grid

<HOME NAME="OraGI21Home1" LOC="/u01/app/21.0.0.0/grid" TYPE="O" IDX="3" CRS="true"/>

<HOME NAME="OraGI19Home1" LOC="/u01/app/19.0.0.0/grid" TYPE="O" IDX="1" REMOVED="T"/>

[grid@oel8n1 ~]$

As you can see below, the inventory on the second node is fine as well. Later you can delete the old GI home on both nodes:

[grid@oel8n2 ~]$ cat /u01/app/oraInventory/ContentsXML/inventory.xml |grep grid

<HOME NAME="OraGI21Home1" LOC="/u01/app/21.0.0.0/grid" TYPE="O" IDX="3" CRS="true"/>

<HOME NAME="OraGI19Home1" LOC="/u01/app/19.0.0.0/grid" TYPE="O" IDX="1" REMOVED="T"/>

[grid@oel8n2 ~]$

Since this is an upgrade process, we need to adjust the oraInventory to inform that the GI home has nodes:

[root@oel8n1 ~]# su - grid

[grid@oel8n1 ~]$

[grid@oel8n1 ~]$ cat /u01/app/oraInventory/ContentsXML/inventory.xml

<?xml version="1.0" standalone="yes" ?>

<!-- Copyright (c) 1999, 2021, Oracle and/or its affiliates.

All rights reserved. -->

<!-- Do not modify the contents of this file by hand. -->

<INVENTORY>

<VERSION_INFO>

<SAVED_WITH>12.2.0.7.0</SAVED_WITH>

<MINIMUM_VER>2.1.0.6.0</MINIMUM_VER>

</VERSION_INFO>

<HOME_LIST>

<HOME NAME="dbhome_19_12" LOC="/u01/app/oracle/product/19.0.0.0/dbhome_1" TYPE="O" IDX="2">

<NODE_LIST>

<NODE NAME="oel8n1"/>

<NODE NAME="oel8n2"/>

</NODE_LIST>

</HOME>

<HOME NAME="OraGI21Home1" LOC="/u01/app/21.0.0.0/grid" TYPE="O" IDX="3" CRS="true"/>

<HOME NAME="OraGI19Home1" LOC="/u01/app/19.0.0.0/grid" TYPE="O" IDX="1" REMOVED="T"/>

</HOME_LIST>

<COMPOSITEHOME_LIST>

</COMPOSITEHOME_LIST>

</INVENTORY>

[grid@oel8n1 ~]$

[grid@oel8n1 ~]$ /u01/app/21.0.0.0/grid/oui/bin/runInstaller -nowait -waitforcompletion -ignoreSysPrereqs -updateNodeList ORACLE_HOME=/u01/app/21.0.0.0/grid "CLUSTER_NODES={oel8n1,oel8n2}" CRS=true LOCAL_NODE=oel8n1

Starting Oracle Universal Installer...

Checking swap space: must be greater than 500 MB. Actual 4046 MB Passed

The inventory pointer is located at /etc/oraInst.loc

[grid@oel8n1 ~]$

[grid@oel8n1 ~]$

[grid@oel8n1 ~]$ cat /u01/app/oraInventory/ContentsXML/inventory.xml

<?xml version="1.0" standalone="yes" ?>

<!-- Copyright (c) 1999, 2021, Oracle and/or its affiliates.

All rights reserved. -->

<!-- Do not modify the contents of this file by hand. -->

<INVENTORY>

<VERSION_INFO>

<SAVED_WITH>12.2.0.9.0</SAVED_WITH>

<MINIMUM_VER>2.1.0.6.0</MINIMUM_VER>

</VERSION_INFO>

<HOME_LIST>

<HOME NAME="dbhome_19_12" LOC="/u01/app/oracle/product/19.0.0.0/dbhome_1" TYPE="O" IDX="2">

<NODE_LIST>

<NODE NAME="oel8n1"/>

<NODE NAME="oel8n2"/>

</NODE_LIST>

</HOME>

<HOME NAME="OraGI21Home1" LOC="/u01/app/21.0.0.0/grid" TYPE="O" IDX="3" CRS="true">

<NODE_LIST>

<NODE NAME="oel8n1"/>

<NODE NAME="oel8n2"/>

</NODE_LIST>

</HOME>

<HOME NAME="OraGI19Home1" LOC="/u01/app/19.0.0.0/grid" TYPE="O" IDX="1" REMOVED="T"/>

</HOME_LIST>

<COMPOSITEHOME_LIST>

</COMPOSITEHOME_LIST>

</INVENTORY>

[grid@oel8n1 ~]$
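To confirm on each node whether the node list is still missing, you can look for a CRS home that is written as a self-closing element, as in the first listing above. This is only a sketch based on the layout of the inventory.xml shown in this post (one HOME element per line). The function name `crs_homes_without_nodes` is hypothetical.

```shell
#!/bin/sh
# Sketch: print the location of CRS homes that have no NODE_LIST in inventory.xml.
# ASSUMPTION: each HOME element is on its own line, and a home without nodes is
# written as a self-closing element (ending in "/>").

crs_homes_without_nodes() {
    # $1 = path to inventory.xml
    grep 'CRS="true"' "$1" |
        grep '/>[[:space:]]*$' |
        sed 's/.*LOC="\([^"]*\)".*/\1/'
}

inv=/u01/app/oraInventory/ContentsXML/inventory.xml
if [ -r "$inv" ]; then
    crs_homes_without_nodes "$inv"
fi
```

An empty result means that every CRS home in the inventory already has its nodes registered.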

Adjust the COMPATIBLE.ASM

To finish, we can adjust COMPATIBLE.ASM to 21c. There is no information about this requirement in the docs, not even the correct value. But as a guide, you can follow the validation table directly from the ASM doc. I set the value below for all disk groups:

[grid@oel8n1 ~]$ sqlplus / as sysasm

SQL*Plus: Release 21.0.0.0.0 - Production on Sun Aug 15 15:24:30 2021

Version 21.3.0.0.0

Copyright (c) 1982, 2021, Oracle. All rights reserved.

Connected to:

Oracle Database 21c Enterprise Edition Release 21.0.0.0.0 - Production

Version 21.3.0.0.0

SQL> SELECT name as dgname, substr(compatibility,1,12) as asmcompat FROM V$ASM_DISKGROUP;

DGNAME ASMCOMPAT

------------------------------ ------------

SYSTEMDG 19.0.0.0.0

RECO 19.0.0.0.0

DATA 19.0.0.0.0

SQL> ALTER DISKGROUP RECO SET ATTRIBUTE 'compatible.asm' = '21.0.0.0.0';

Diskgroup altered.

SQL> ALTER DISKGROUP DATA SET ATTRIBUTE 'compatible.asm' = '21.0.0.0.0';

Diskgroup altered.

SQL> ALTER DISKGROUP SYSTEMDG SET ATTRIBUTE 'compatible.asm' = '21.0.0.0.0';

Diskgroup altered.

SQL> SELECT name as dgname, substr(compatibility,1,12) as asmcompat FROM V$ASM_DISKGROUP;

DGNAME ASMCOMPAT

------------------------------ ------------

SYSTEMDG 21.0.0.0.0

RECO 21.0.0.0.0

DATA 21.0.0.0.0

SQL>
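With many disk groups, typing one ALTER per group is error prone. The sketch below only generates the same statements used above so that you can review them before running them in sqlplus as sysasm. The function name `gen_compat_sql` is hypothetical, and the disk group names are the ones from my environment.

```shell
#!/bin/sh
# Sketch: generate the ALTER DISKGROUP statements for a list of disk groups.
# Nothing is executed against ASM; the statements are only printed for review.

gen_compat_sql() {
    # $1 = target compatible.asm value, remaining arguments = disk group names
    target=$1
    shift
    for dg in "$@"; do
        printf "ALTER DISKGROUP %s SET ATTRIBUTE 'compatible.asm' = '%s';\n" "$dg" "$target"
    done
}

gen_compat_sql 21.0.0.0.0 SYSTEMDG RECO DATA
```

Review the list carefully, because advancing the disk group compatibility cannot be reverted afterwards.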

Upgrade GI for OEL 7.6

In this link, you can see the execution log of a GI upgrade from 19.5 to 21c that runs using ASMLIB. The server in this case runs on OEL 7.6. As you can see in the attached file, there were no errors (besides AHF).

Disclaimer: “The postings on this site are my own and don’t necessarily represent my actual employer positions, strategies or opinions. The information here was edited to be useful for general purpose, specific data and identifications were removed to allow reach the generic audience and to be useful for the community. Post protected by copyright.”