On 08/March/2023 the Oracle Exadata team released version 23.1.0.0.0, and it includes a significant change: OEL 8. I already explained that in my first post, which you can read here. In my previous posts, I described how to patch the storage and switch, and the dom0. In this post, I will discuss how to patch the domU.

What you can do

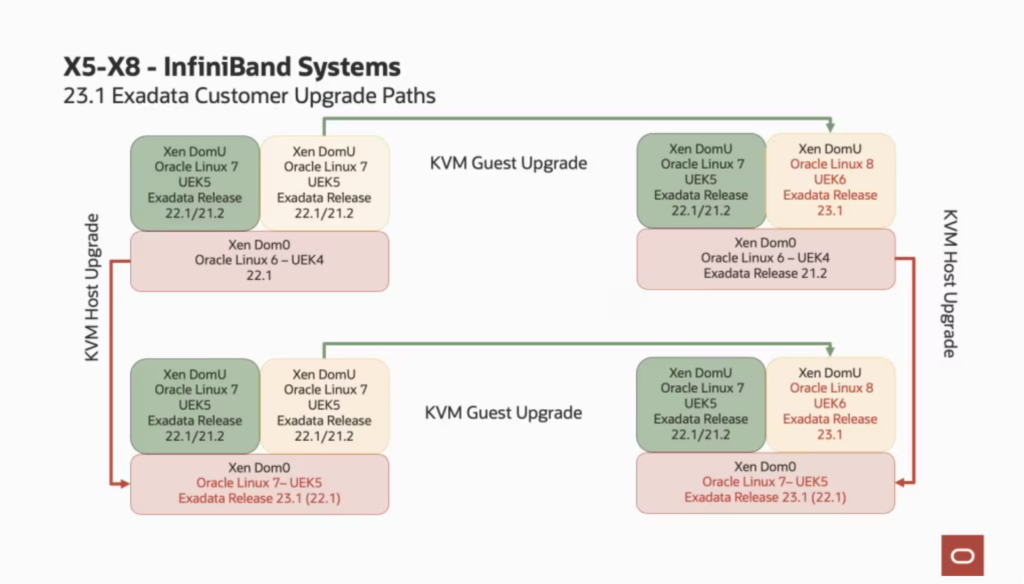

I already wrote this previously, but it is important to understand the upgrade paths that are available. If you are running the old Exadata with InfiniBand, your dom0 can only be updated up to Oracle Linux 7 with UEK5. The domU, however, can be upgraded to OEL 8. And you can upgrade in any order, dom0 first or domU first. If you are running RoCE, your dom0 can run the latest OEL 8 with UEK6. The blog post from Oracle gives an excellent explanation of the upgrade paths, and you can see their image here (I used the image from their post).

So, here we are on an Exadata with InfiniBand. I previously upgraded the dom0, and now it is the turn of the domU. The good part is that it will be patched to OEL 8 with UEK6 (image from the Exadata Team post):

Patching domU

Unlike the dom0, the domU is the virtual part, so you don’t need to check for HW errors or restart the ILOM. But you do need to check compatibility for the databases and GI. You REALLY need to meet the requirements described in MOS Note 2772585.1 and the Exadata Team post. Again, if your domU is not running a required/compatible version, you can use this same post to patch it first (just change the patch numbers). For the GI, you can use my previous posts about how to do that for 19c and 21c. I described these requirements in more detail in my first post (I recommend reading it to understand them better).

Upgrading from OEL 7 to OEL 8 is quite a complex procedure, and I recommend backing up your environment. Back up your “boot partition”, “root and /”, and everything else that you would need to restore in case of failure. For a domU (since it is just a file copy), do an offline backup with the domU stopped (cp to somewhere on the dom0), or take an LVM snapshot in the case of a BM machine. This can save your day.

You will need one machine from which you call the patch, and it needs passwordless (key-based) ssh to every domU that will be patched. You can do it from one domU to another, or from an “outsider” machine and patch all of them at the same time in non-rolling mode. Whatever the mode, plan the downtime for the domUs.
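Before calling patchmgr, it can help to confirm that passwordless ssh really works for every node in the group file. The sketch below is only an illustration under stated assumptions: the function name `check_ssh_equivalence` and the `SSH_BIN` override are hypothetical, and patchmgr runs its own SSH equivalence check anyway.

```shell
#!/usr/bin/env bash
# Sketch: verify passwordless root ssh to every node listed in a group file.
# check_ssh_equivalence and SSH_BIN are hypothetical names for this example;
# SSH_BIN only exists so the ssh client can be swapped out for a dry run.
check_ssh_equivalence() {
    local group_file="$1" node rc=0
    # The "|| [ -n ... ]" part also handles a last line without a newline.
    while read -r node || [ -n "$node" ]; do
        # Skip blank lines and comment lines in the group file.
        case "$node" in ''|'#'*) continue ;; esac
        # BatchMode=yes makes ssh fail instead of prompting for a password;
        # -n stops ssh from consuming the rest of the group file on stdin.
        if "${SSH_BIN:-ssh}" -n -o BatchMode=yes -o ConnectTimeout=5 "root@${node}" true; then
            echo "OK   ${node}"
        else
            echo "FAIL ${node}"
            rc=1
        fi
    done < "$group_file"
    return "$rc"
}

# Example call (not executed here):
#   check_ssh_equivalence /root/dbs_group_exwcl02
```

The function returns non-zero if any node refuses key-based login, so it can gate a wrapper script before the precheck is started.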

Here I will patch the domUs in non-rolling mode, so all the databases and the clusterware will be offline. You can do this with a simple “crsctl stop cluster” (cropped below to reduce output):

    [root@exadUvm02 ~]# /u01/app/19.0.0.0/grid/bin/crsctl stop cluster
    CRS-2673: Attempting to stop 'ora.crsd' on 'exadUvm02'
    CRS-2790: Starting shutdown of Cluster Ready Services-managed resources on server 'exadUvm02'
    …
    CRS-2673: Attempting to stop 'ora.asm' on 'exadUvm02'
    CRS-2677: Stop of 'ora.ctssd' on 'exadUvm02' succeeded
    CRS-2677: Stop of 'ora.evmd' on 'exadUvm02' succeeded
    CRS-2677: Stop of 'ora.asm' on 'exadUvm02' succeeded
    CRS-2673: Attempting to stop 'ora.cssd' on 'exadUvm02'
    CRS-2677: Stop of 'ora.cssd' on 'exadUvm02' succeeded
    CRS-2679: Attempting to clean 'ora.diskmon' on 'exadUvm02'
    CRS-2681: Clean of 'ora.diskmon' on 'exadUvm02' succeeded
    [root@exadUvm02 ~]#

And since the domU/BM will restart a few times during the process, I recommend disabling the CRS as well to avoid any delay.

    [root@exadUvm02 ~]# /u01/app/19.0.0.0/grid/bin/crsctl disable crs
    CRS-4621: Oracle High Availability Services autostart is disabled.
    [root@exadUvm02 ~]#
    [root@exadUvm02 ~]#
    [root@exadUvm02 ~]# /u01/app/19.0.0.0/grid/bin/crsctl stop crs
    CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'exadUvm02'
    CRS-2673: Attempting to stop 'ora.mdnsd' on 'exadUvm02'
    CRS-2673: Attempting to stop 'ora.crf' on 'exadUvm02'
    CRS-2673: Attempting to stop 'ora.drivers.acfs' on 'exadUvm02'
    CRS-2673: Attempting to stop 'ora.gpnpd' on 'exadUvm02'
    CRS-2677: Stop of 'ora.drivers.acfs' on 'exadUvm02' succeeded
    CRS-2677: Stop of 'ora.mdnsd' on 'exadUvm02' succeeded
    CRS-2677: Stop of 'ora.gpnpd' on 'exadUvm02' succeeded
    CRS-2677: Stop of 'ora.crf' on 'exadUvm02' succeeded
    CRS-2673: Attempting to stop 'ora.gipcd' on 'exadUvm02'
    CRS-2677: Stop of 'ora.gipcd' on 'exadUvm02' succeeded
    CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'exadUvm02' has completed
    CRS-4133: Oracle High Availability Services has been stopped.
    [root@exadUvm02 ~]#

Stopping everything (on all nodes that will be patched) speeds up the process because you don’t need to wait for any additional service (like GI) to start up. An additional step is to comment out any NFS filesystem in fstab. This is important because NFS depends on the network: if the network is slow to start during the patch, the boot has to wait for the mountpoints to time out (and this can take a lot of time depending on what was configured).
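Commenting out the NFS entries can be scripted so that it is easy to undo after the patch. The following is a minimal sketch, assuming a standard fstab layout where the third field is the filesystem type; the function names, the `#PATCH# ` marker, and the `.pre_patch` backup suffix are hypothetical choices for this example.

```shell
#!/usr/bin/env bash
# Sketch: comment out NFS entries in an fstab file and restore them later.
# Function names, the "#PATCH# " marker and the ".pre_patch" suffix are
# hypothetical; adapt them to your own conventions.
comment_out_nfs() {
    local fstab="$1"
    # Keep an untouched copy first so the change can always be reverted.
    cp -p "$fstab" "${fstab}.pre_patch" || return 1
    # Prefix every active line whose third field (fs type) is nfs or nfs4.
    awk '!/^[[:space:]]*#/ && ($3 == "nfs" || $3 == "nfs4") { print "#PATCH# " $0; next }
         { print }' "${fstab}.pre_patch" > "$fstab"
}

uncomment_nfs() {
    local fstab="$1" tmp rc
    tmp=$(mktemp) || return 1
    # Strip only the marker added by comment_out_nfs; other comments stay.
    sed 's/^#PATCH# //' "$fstab" > "$tmp" && cat "$tmp" > "$fstab"
    rc=$?
    rm -f "$tmp"
    return "$rc"
}

# Example calls (not executed here), run as root on each domU:
#   comment_out_nfs /etc/fstab     # before the patch
#   uncomment_nfs /etc/fstab       # after the patch, then: mount -a
```

Using a distinctive marker instead of a plain `#` means entries that were already commented out before the patch are left alone when the change is reverted.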

Here the process is similar to the dom0. We need two files: 1 – the patchmgr (from Patch 21634633; please read note 1553103.1 if you have doubts), and 2 – the ISO/patch itself (Patch 32829545). This is important because the patch for 23.1 is just an ISO of the YUM channel, and it does not come with any patchmgr inside (unlike the switch/storage server patch).

So, we download and unzip patch 21634633:

    [DOM0 - root@exadom0m01 ~]$ cd /EXAVMIMAGES/patches/
    [DOM0 - root@exadom0m01 patches]$
    [DOM0 - root@exadom0m01 patches]$
    [DOM0 - root@exadom0m01 patches]$ ls -l patchmgr
    total 559108
    drwxrwxr-x 5 root root 3896 Mar 10 17:23 dbserver_patch_230225
    -rw-r--r-- 1 root root 562465570 Mar 9 10:11 p21634633_231000_Linux-x86-64.zip
    -rw-r--r-- 1 root root 9236973 Mar 10 17:23 screenlog.0
    [DOM0 - root@exadom0m01 patches]$
    [DOM0 - root@exadom0m01 patches]$ cd patchmgr/
    [DOM0 - root@exadom0m01 patchmgr]$
    [DOM0 - root@exadom0m01 patchmgr]$
    [DOM0 - root@exadom0m01 patchmgr]$ unzip -q p21634633_231000_Linux-x86-64.zip
    [DOM0 - root@exadom0m01 patchmgr]$
    [DOM0 - root@exadom0m01 patchmgr]$ ls -l
    total 559108
    drwxrwxr-x 3 root root 3896 Feb 26 00:56 dbserver_patch_230225
    -rw-r--r-- 1 root root 562465570 Mar 9 10:11 p21634633_231000_Linux-x86-64.zip
    -rw-r--r-- 1 root root 9236973 Mar 10 17:23 screenlog.0
    [DOM0 - root@exadom0m01 patchmgr]$
    [DOM0 - root@exadom0m01 patchmgr]$ cd
    [DOM0 - root@exadom0m01 ~]$

After that, we can do the precheck for the server that we want to patch and the target version:

    [DOM0 - root@exadom0m01 ~]$ cat /root/dbs_group_exwcl02
    exadUvm01
    exadUvm02
    [DOM0 - root@exadom0m01 ~]$
    [DOM0 - root@exadom0m01 ~]$ cd /EXAVMIMAGES/patches/patchmgr/
    [DOM0 - root@exadom0m01 patchmgr]$
    [DOM0 - root@exadom0m01 patchmgr]$ ./dbserver_patch_230225/patchmgr -dbnodes /root/dbs_group_exwcl02 --precheck --iso_repo /EXAVMIMAGES/patches/23.1.0/domU/p32829545_231000_Linux-x86-64.zip --target_version 23.1.0.0.0.230225.1 --skip_gi_db_validation
    ************************************************************************************************************
    NOTE patchmgr release: 23.230225 (always check MOS 1553103.1 for the latest release of dbserver.patch.zip)
    NOTE
    WARNING Do not interrupt the patchmgr session.
    WARNING Do not resize the screen. It may disturb the screen layout.
    WARNING Do not reboot database nodes during update or rollback.
    WARNING Do not open logfiles in write mode and do not try to alter them.
    ************************************************************************************************************
    2023-03-10 17:30:50 +0100 :INFO : Checking hosts connectivity via ICMP/ping
    2023-03-10 17:30:51 +0100 :INFO : Hosts Reachable: [exadUvm01 exadUvm02]
    2023-03-10 17:30:51 +0100 :INFO : All hosts are reachable via ping/ICMP
    2023-03-10 17:30:51 +0100 :Working: Verify SSH equivalence for the root user to node(s)
    2023-03-10 17:30:53 +0100 :INFO : SSH equivalency verified to host exadUvm01
    2023-03-10 17:30:54 +0100 :INFO : SSH equivalency verified to host exadUvm02
    2023-03-10 17:30:55 +0100 :SUCCESS: Verify SSH equivalence for the root user to node(s)
    2023-03-10 17:30:59 +0100 :Working: Initiate precheck on 2 node(s)
    2023-03-10 17:31:17 +0100 :Working: Check for enough free space on node(s) to transfer and unzip files.
    2023-03-10 17:31:29 +0100 :SUCCESS: Check for enough free space on node(s) to transfer and unzip files.
    2023-03-10 17:31:31 +0100 :INFO : Preparing nodes: exadUvm01 exadUvm02
    2023-03-10 17:32:21 +0100 :Working: dbnodeupdate.sh running a precheck for the conventional update on node(s).
    2023-03-10 17:36:03 +0100 :SUCCESS: Initiate precheck on node(s).
    2023-03-10 17:36:05 +0100 :SUCCESS: Completed run of command: ./dbserver_patch_230225/patchmgr -dbnodes /root/dbs_group_exwcl02 --precheck --iso_repo /EXAVMIMAGES/patches/23.1.0/domU/p32829545_231000_Linux-x86-64.zip --target_version 23.1.0.0.0.230225.1 --skip_gi_db_validation
    2023-03-10 17:36:05 +0100 :INFO : Precheck performed on dbnode(s) in file /root/dbs_group_exwcl02: [exadUvm01 exadUvm02]
    2023-03-10 17:36:05 +0100 :INFO : Current image version on dbnode(s) is:
    2023-03-10 17:36:05 +0100 :INFO : exadUvm01: 22.1.9.0.0.230302
    2023-03-10 17:36:05 +0100 :INFO : exadUvm02: 22.1.9.0.0.230302
    2023-03-10 17:36:05 +0100 :INFO : For details, check the following files in /EXAVMIMAGES/patches/patchmgr/dbserver_patch_230225:
    2023-03-10 17:36:05 +0100 :INFO : - <dbnode_name>_dbnodeupdate.log
    2023-03-10 17:36:05 +0100 :INFO : - patchmgr.log
    2023-03-10 17:36:05 +0100 :INFO : - patchmgr.trc
    2023-03-10 17:36:13 +0100 :INFO : Collected dbnodeupdate diag in file: Diag_patchmgr_dbnode_precheck_100323173037.tbz
    2023-03-10 17:36:13 +0100 :INFO : Exit status:0
    2023-03-10 17:36:13 +0100 :INFO : Exiting.
    [DOM0 - root@exadom0m01 patchmgr]$

Some details about the parameters: the servers that will be patched are defined by the parameter “-dbnodes”, the action is “--precheck”, the ISO used to patch is defined by “--iso_repo”, and the target version by “--target_version”. And since the GI is already patched with a compatible version, I used “--skip_gi_db_validation” so that patchmgr skips the GI check. Besides that, the GI is down, and some checks could fail.

Above, you can see that everything is fine, and we can call the patch. Now calling the patchmgr with the “--upgrade” option:
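Since the precheck and the upgrade differ only in the action flag, the command line can be assembled from a few variables to avoid typos between the two runs. This is just a sketch: the variable names and the `patchmgr_cmd` function are hypothetical, the values are the ones used in this post, and the function only prints the command instead of running it.

```shell
#!/usr/bin/env bash
# Sketch: build the patchmgr command line once and reuse it for both actions.
# Variable and function names are hypothetical; values come from this post.
PATCHMGR=./dbserver_patch_230225/patchmgr
DBNODES=/root/dbs_group_exwcl02
ISO_REPO=/EXAVMIMAGES/patches/23.1.0/domU/p32829545_231000_Linux-x86-64.zip
TARGET_VERSION=23.1.0.0.0.230225.1

patchmgr_cmd() {
    local action="$1"
    # Only the two actions shown in this post are accepted by the sketch.
    case "$action" in
        precheck|upgrade) ;;
        *) echo "unsupported action: $action" >&2; return 1 ;;
    esac
    # Print the command so it can be reviewed before it is executed.
    printf '%s -dbnodes %s --%s --iso_repo %s --target_version %s --skip_gi_db_validation\n' \
        "$PATCHMGR" "$DBNODES" "$action" "$ISO_REPO" "$TARGET_VERSION"
}

# Example calls (not executed here):
#   patchmgr_cmd precheck
#   patchmgr_cmd upgrade
```

Printing rather than executing keeps the sketch safe to try anywhere; the printed line can be pasted into the screen session once it has been reviewed.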

    [DOM0 - root@exadom0m01 patchmgr]$ pwd
    /EXAVMIMAGES/patches/patchmgr
    [DOM0 - root@exadom0m01 patchmgr]$
    [DOM0 - root@exadom0m01 patchmgr]$ screen -L -RR Patch-DBNODE
    [DOM0 - root@exadom0m01 patchmgr]$
    [DOM0 - root@exadom0m01 patchmgr]$ ./dbserver_patch_230225/patchmgr -dbnodes /root/dbs_group_exwcl02 --upgrade --iso_repo /EXAVMIMAGES/patches/23.1.0/domU/p32829545_231000_Linux-x86-64.zip --target_version 23.1.0.0.0.230225.1 --skip_gi_db_validation
    ************************************************************************************************************
    NOTE patchmgr release: 23.230225 (always check MOS 1553103.1 for the latest release of dbserver.patch.zip)
    NOTE
    NOTE Database nodes will reboot during the update process.
    NOTE
    WARNING Do not interrupt the patchmgr session.
    WARNING Do not resize the screen. It may disturb the screen layout.
    WARNING Do not reboot database nodes during update or rollback.
    WARNING Do not open logfiles in write mode and do not try to alter them.
    ************************************************************************************************************
    2023-03-10 17:41:36 +0100 :INFO : Checking hosts connectivity via ICMP/ping
    2023-03-10 17:41:37 +0100 :INFO : Hosts Reachable: [exadUvm01 exadUvm02]
    2023-03-10 17:41:37 +0100 :INFO : All hosts are reachable via ping/ICMP
    2023-03-10 17:41:38 +0100 :Working: Verify SSH equivalence for the root user to node(s)
    2023-03-10 17:41:39 +0100 :INFO : SSH equivalency verified to host exadUvm01
    2023-03-10 17:41:41 +0100 :INFO : SSH equivalency verified to host exadUvm02
    2023-03-10 17:41:41 +0100 :SUCCESS: Verify SSH equivalence for the root user to node(s)
    2023-03-10 17:41:46 +0100 :Working: Initiate prepare steps on node(s).
    2023-03-10 17:41:48 +0100 :Working: Check for enough free space on node(s) to transfer and unzip files.
    2023-03-10 17:42:00 +0100 :SUCCESS: Check for enough free space on node(s) to transfer and unzip files.
    2023-03-10 17:42:02 +0100 :INFO : Preparing nodes: exadUvm01 exadUvm02
    2023-03-10 17:42:54 +0100 :SUCCESS: Initiate prepare steps on node(s).
    2023-03-10 17:42:54 +0100 :Working: Initiate update on 2 node(s).
    2023-03-10 17:42:55 +0100 :Working: dbnodeupdate.sh running a backup on 2 node(s).
    2023-03-10 17:50:49 +0100 :SUCCESS: dbnodeupdate.sh running a backup on 2 node(s).
    2023-03-10 17:50:49 +0100 :Working: Initiate update on node(s)
    2023-03-10 17:50:49 +0100 :Working: Get information about any required OS upgrades from exadUvm01.
    2023-03-10 17:50:49 +0100 :Working: Get information about any required OS upgrades from exadUvm02.
    2023-03-10 17:51:00 +0100 :SUCCESS: Get information about any required OS upgrades from exadUvm01.
    2023-03-10 17:51:00 +0100 :SUCCESS: Get information about any required OS upgrades from exadUvm02.
    2023-03-10 17:51:01 +0100 :Working: dbnodeupdate.sh running an update step on exadUvm01.
    2023-03-10 17:51:02 +0100 :Working: dbnodeupdate.sh running an update step on exadUvm02.
    2023-03-10 18:01:38 +0100 :INFO : exadUvm02 is ready to reboot.
    2023-03-10 18:01:38 +0100 :SUCCESS: dbnodeupdate.sh running an update step on exadUvm02.
    2023-03-10 18:01:51 +0100 :INFO : exadUvm01 is ready to reboot.
    2023-03-10 18:01:51 +0100 :SUCCESS: dbnodeupdate.sh running an update step on exadUvm01.
    2023-03-10 18:02:13 +0100 :Working: Initiate reboot on exadUvm02.
    2023-03-10 18:02:19 +0100 :SUCCESS: Initiate reboot on exadUvm02.
    2023-03-10 18:02:20 +0100 :Working: Waiting to ensure exadUvm02 is down before reboot.
    2023-03-10 18:02:26 +0100 :Working: Initiate reboot on exadUvm01.
    2023-03-10 18:02:33 +0100 :SUCCESS: Initiate reboot on exadUvm01.
    2023-03-10 18:02:33 +0100 :Working: Waiting to ensure exadUvm01 is down before reboot.
    2023-03-10 18:03:46 +0100 :SUCCESS: Waiting to ensure exadUvm02 is down before reboot.
    2023-03-10 18:03:46 +0100 :Working: Waiting to ensure exadUvm02 is up after reboot.
    2023-03-10 18:03:59 +0100 :SUCCESS: Waiting to ensure exadUvm01 is down before reboot.
    2023-03-10 18:03:59 +0100 :Working: Waiting to ensure exadUvm01 is up after reboot.
    2023-03-10 18:17:21 +0100 :SUCCESS: Waiting to ensure exadUvm02 is up after reboot.
    2023-03-10 18:17:21 +0100 :Working: Waiting to connect to exadUvm02 with SSH. During Linux upgrades this can take some time.
    2023-03-10 18:17:24 +0100 :SUCCESS: Waiting to connect to exadUvm02 with SSH. During Linux upgrades this can take some time.
    2023-03-10 18:17:24 +0100 :Working: Wait for exadUvm02 is ready for the completion step of update.
    2023-03-10 18:17:25 +0100 :SUCCESS: Wait for exadUvm02 is ready for the completion step of update.
    2023-03-10 18:17:26 +0100 :Working: Initiate completion step from dbnodeupdate.sh on exadUvm02
    2023-03-10 18:18:11 +0100 :SUCCESS: Waiting to ensure exadUvm01 is up after reboot.
    2023-03-10 18:18:11 +0100 :Working: Waiting to connect to exadUvm01 with SSH. During Linux upgrades this can take some time.
    2023-03-10 18:18:14 +0100 :SUCCESS: Waiting to connect to exadUvm01 with SSH. During Linux upgrades this can take some time.
    2023-03-10 18:18:14 +0100 :Working: Wait for exadUvm01 is ready for the completion step of update.
    2023-03-10 18:18:15 +0100 :SUCCESS: Wait for exadUvm01 is ready for the completion step of update.
    2023-03-10 18:18:16 +0100 :Working: Initiate completion step from dbnodeupdate.sh on exadUvm01
    2023-03-10 18:22:18 +0100 :SUCCESS: Initiate completion step from dbnodeupdate.sh on exadUvm02.
    2023-03-10 18:23:12 +0100 :SUCCESS: Initiate completion step from dbnodeupdate.sh on exadUvm01.
    2023-03-10 18:24:28 +0100 :SUCCESS: Initiate update on node(s).
    2023-03-10 18:24:28 +0100 :SUCCESS: Initiate update on all node(s)
    2023-03-10 18:24:28 +0100 :SUCCESS: Initiate update on 2 node(s).
    2023-03-10 18:24:30 +0100 :SUCCESS: Completed run of command: ./dbserver_patch_230225/patchmgr -dbnodes /root/dbs_group_exwcl02 --upgrade --iso_repo /EXAVMIMAGES/patches/23.1.0/domU/p32829545_231000_Linux-x86-64.zip --target_version 23.1.0.0.0.230225.1 --skip_gi_db_validation
    2023-03-10 18:24:30 +0100 :INFO : Upgrade performed on dbnode(s) in file /root/dbs_group_exwcl02: [exadUvm01 exadUvm02]
    2023-03-10 18:24:30 +0100 :INFO : Current image version on dbnode(s) is:
    2023-03-10 18:24:31 +0100 :INFO : exadUvm01: 23.1.0.0.0.230225.1
    2023-03-10 18:24:31 +0100 :INFO : exadUvm02: 23.1.0.0.0.230225.1
    2023-03-10 18:24:31 +0100 :INFO : For details, check the following files in /EXAVMIMAGES/patches/patchmgr/dbserver_patch_230225:
    2023-03-10 18:24:31 +0100 :INFO : - <dbnode_name>_dbnodeupdate.log
    2023-03-10 18:24:31 +0100 :INFO : - patchmgr.log
    2023-03-10 18:24:31 +0100 :INFO : - patchmgr.trc
    2023-03-10 18:24:59 +0100 :INFO : Collected dbnodeupdate diag in file: Diag_patchmgr_dbnode_upgrade_100323174122.tbz
    2023-03-10 18:25:00 +0100 :INFO : Exit status:0
    2023-03-10 18:25:00 +0100 :INFO : Exiting.
    [DOM0 - root@exadom0m01 patchmgr]$

As you can see, the patch ran fine and was executed on all servers at the same time. So, in non-rolling mode (the default), the patch was faster and finished in less than one hour. The patch is “fast” because the domU doesn’t need to patch any HW; it just copies the new files and points the boot to them.

After that, we can connect to the domUs and start the GI:

    [root@exadUvm01 ~]# /u01/app/19.0.0.0/grid/bin/crsctl enable crs
    CRS-4622: Oracle High Availability Services autostart is enabled.
    [root@exadUvm01 ~]#
    [root@exadUvm01 ~]# /u01/app/19.0.0.0/grid/bin/crsctl start crs
    CRS-4123: Oracle High Availability Services has been started.
    [root@exadUvm01 ~]#

Since everything is fine, we can call the cleanup with patchmgr (“--cleanup”). At the end you will have something like this:

    [DOM0 - root@exadUvm01 patchmgr]$ dcli -l root -g /root/dbs_group_exwcl02 "cat /etc/redhat-release"
    exadUvm01: Red Hat Enterprise Linux release 8.7 (Ootpa)
    exadUvm02: Red Hat Enterprise Linux release 8.7 (Ootpa)
    [DOM0 - root@exadUvm01 patchmgr]$
    [DOM0 - root@exadUvm01 patchmgr]$ dcli -l root -g /root/dbs_group_exwcl02 "imageinfo"
    exadUvm01:
    exadUvm01: Kernel version: 5.4.17-2136.315.5.8.el8uek.x86_64 #2 SMP Fri Feb 24 14:56:37 PST 2023 x86_64
    exadUvm01: Image kernel version: 5.4.17-2136.315.5.8.el8uek
    exadUvm01: Image version: 23.1.0.0.0.230225.1
    exadUvm01: Image activated: 2023-03-10 18:18:55 +0100
    exadUvm01: Image status: success
    exadUvm01: Exadata software version: 23.1.0.0.0.230225.1
    exadUvm01: Node type: GUEST
    exadUvm01: System partition on device: /dev/mapper/VGExaDb-LVDbSys1
    exadUvm01:
    exadUvm02:
    exadUvm02: Kernel version: 5.4.17-2136.315.5.8.el8uek.x86_64 #2 SMP Fri Feb 24 14:56:37 PST 2023 x86_64
    exadUvm02: Image kernel version: 5.4.17-2136.315.5.8.el8uek
    exadUvm02: Image version: 23.1.0.0.0.230225.1
    exadUvm02: Image activated: 2023-03-10 18:18:06 +0100
    exadUvm02: Image status: success
    exadUvm02: Exadata software version: 23.1.0.0.0.230225.1
    exadUvm02: Node type: GUEST
    exadUvm02: System partition on device: /dev/mapper/VGExaDb-LVDbSys1
    exadUvm02:
    [DOM0 - root@exadUvm01 patchmgr]$
    [DOM0 - root@exadUvm01 patchmgr]$ dcli -l root -g /root/dbs_group_exwcl02 "imageinfo" |grep "Image version"
    exadUvm01: Image version: 23.1.0.0.0.230225.1
    exadUvm02: Image version: 23.1.0.0.0.230225.1
    [DOM0 - root@exadUvm01 patchmgr]$
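With more than a couple of nodes, checking the "Image version" lines by eye gets tedious. As a rough sketch, the dcli output can be piped into a small filter that fails when any node is not on the target version. The function name `check_image_versions` is hypothetical, and it assumes the `host: Image version: X` line format shown in the output above.

```shell
#!/usr/bin/env bash
# Sketch: confirm that every node reports the expected image version.
# check_image_versions is a hypothetical helper; it reads dcli/imageinfo
# output on stdin in the "host: Image version: X" format shown in this post.
check_image_versions() {
    local target="$1"
    awk -v target="$target" '
        /Image version:/ {
            host = $1; sub(/:$/, "", host)   # drop the colon dcli appends
            seen++
            if ($NF != target) { printf "MISMATCH %s %s\n", host, $NF; bad++ }
        }
        # Fail if no version line was seen at all or if any node differs.
        END { exit ((seen == 0 || bad > 0) ? 1 : 0) }'
}

# Example call (not executed here):
#   dcli -l root -g /root/dbs_group_exwcl02 "imageinfo" \
#       | check_image_versions 23.1.0.0.0.230225.1 && echo "all nodes patched"
```

Treating empty input as a failure is deliberate: if dcli could not reach any node, the check should not report success.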

Disclaimer: “The postings on this site are my own and don’t necessarily represent my actual employer positions, strategies or opinions. The information here was edited to be useful for general purposes, specific data and identifications were removed to allow reach the generic audience and to be useful for the community. Post protected by copyright.”