In my previous post, I explained why you should use the new parameters DB_FLASHBACK_LOG_DEST_SIZE and DB_FLASHBACK_LOG_DEST in Oracle 23ai, how to configure them, and the benefits they bring. Here you will find additional details about these two parameters and what they change in the internal views and the restore points.

Tag Archives: Database

23ai, new parameters DB_FLASHBACK_LOG_DEST_SIZE and DB_FLASHBACK_LOG_DEST

Oracle Database includes Oracle Flashback Technology, which allows you to view old images of your data without the need to restore your database. You can use restore points, recover tables and rows, and much more. To use it (in a simple way), you need to enable archivelog and flashback mode for your database, and Oracle will create additional logs while you change the data.

Unfortunately, it is exactly these logs that create some issues. Jonathan Lewis already described this issue: in summary, while changing the data you need to write more because you generate both UNDO and flashback logs. In essence, you write more every time.

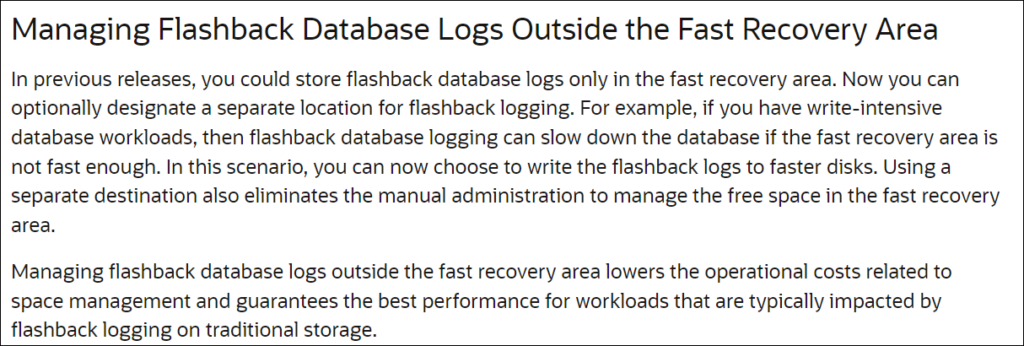

Until Oracle 23ai, it was not possible to change the place where these logs are written; (more or less) they always went to the same place as your archivelogs (when using the fast recovery area). So, archivelogs and flashback logs were tied to the same location. Luckily, this changed with the new features of 23ai.

The idea is to put the flashback logs on a dedicated (and fast) disk to reduce the impact of writing them.
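As a rough illustration of what pointing the flashback logs at a dedicated location could look like, here is a minimal Python sketch that only builds the `ALTER SYSTEM` statements as strings (it does not connect to or change any database). The helper name `flashback_dest_statements`, the diskgroup `+FLASHDG`, and the size `50G` are hypothetical values chosen for the example. Setting the size before the destination is an assumption that mirrors how `DB_RECOVERY_FILE_DEST_SIZE` and `DB_RECOVERY_FILE_DEST` are usually handled; check the 23ai documentation before relying on it.

```python
import re


def flashback_dest_statements(dest: str, size: str, scope: str = "BOTH") -> list[str]:
    """Build (but do not run) the statements for a dedicated flashback log destination.

    dest  -- hypothetical ASM diskgroup or directory, e.g. "+FLASHDG"
    size  -- size with an optional K/M/G/T suffix, e.g. "50G"
    scope -- MEMORY, SPFILE, or BOTH
    """
    if not dest:
        raise ValueError("dest must not be empty")
    if not re.fullmatch(r"\d+[KMGT]?", size.upper()):
        raise ValueError(f"invalid size: {size!r}")
    scope = scope.upper()
    if scope not in {"MEMORY", "SPFILE", "BOTH"}:
        raise ValueError(f"invalid scope: {scope!r}")

    # Assumption: the size limit is set first, then the destination,
    # in the same way the fast recovery area parameters are ordered.
    return [
        f"ALTER SYSTEM SET DB_FLASHBACK_LOG_DEST_SIZE={size} SCOPE={scope} SID='*'",
        f"ALTER SYSTEM SET DB_FLASHBACK_LOG_DEST='{dest}' SCOPE={scope} SID='*'",
    ]


if __name__ == "__main__":
    # Print the statements so they can be reviewed before running them manually.
    for stmt in flashback_dest_statements("+FLASHDG", "50G"):
        print(stmt + ";")
```

Generating the statements instead of executing them keeps the sketch safe to run anywhere, and the output can be reviewed and pasted into SQL*Plus.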

21c, DG PDB, New Steps

When DG PDB was released for 21c (in version 21.7), I wrote a blog post about how to use the feature (you can read it here). That was in August 2022, and since then we have had small changes and corrections, but with the 21.12 update (patch 35740258) we got new commands like “EDIT CONFIGURATION PREPARE DGPDB”.

Not just that: Ludovico Caldara (Data Guard PM) recently wrote a blog post about new commands for Data Guard preparation that can be used with the Broker. They are an evolution of the commands I covered in a previous blog post.

So, in this post, I will cover the new commands for DG PDB and the changes/improvements that appeared in the latest version. It is a long post, but everything is covered here. Nothing is missing: all the steps, logs, and outputs are described and documented.

Friends, Conferences, and Community

Last September was pretty special for me because I had the opportunity to meet friends again after the COVID pandemic.

POUG

First, POUG is POUG. There is no way to describe what it is if you have never been there. I had the opportunity to be there talking about ExaCC. The whole conference is amazing, not just because of the technical content (which is surreal), but also because of the friends who were/are there. Everyone there was enjoying the conference but, most importantly, enjoying being there with friends.

For POUG I need to say thank you to Kamil Stawiarski (https://twitter.com/ora600pl) and Luiza Nowak (https://www.ora-600.pl/en/tp/luiza-koziel-2/) for organizing the event. You, together with the whole POUG team, made a fantastic conference.

21c, DG PDB

Ever since 21c became publicly available, Data Guard per Pluggable Database (DG PDB) was intended to be part of it, but Oracle needed more time to make things work and, some weeks ago, released the feature with version 21.7. In this post, I will show how to configure it, how to troubleshoot it, and the pitfalls of using it. As usual, all the steps, logs, and outputs are covered here, and I hope it helps you understand the whole DG PDB process.

My environment

The environment that I am using here is:

- Two databases running in RAC mode (two nodes in each cluster).

- ASM: the same DATA and RECO diskgroup names in each cluster.

About the databases I have:

- ORADBDC1, which has the pdb PDBDC1. Together, they represent DC1.

- ORADBDC2, which has the pdb PDBDC2. Together, they represent DC2.

Each of these clusters is in a separate environment, which means that both are primary databases inside their own datacenter. So, there is no DG configured between them.

The main goal of this post is to have the pdb from DC2 protected by ORADBDC1 at DC1. I used RAC and ASM because this is the usual configuration for MAA (following the recommended architecture baselines) when using DG. This increases the protection and reduces the SPOFs in your environment.

DG PDB

The idea of DG PDB differs a little from what we commonly see for Data Guard: here, each container has its own life. This means that only the pdb is protected, not the entire cdb. This puts DG PDB closer to Cloud than to On-Prem, because it fits the OCI structure perfectly: you can create your pdb in one region and choose another region to protect it. It gets even closer if you think of Autonomous Database, where you own only the pdb. I will not say whether this is good or bad, but it is linked to how Oracle works with OCI. Personally, I prefer to have a normal DG configured to protect my databases and to choose where I want to open my pdb (maybe they will add this feature in the future).

Another detail is that DG PDB (for now) works only in MaxPerformance mode, so there is no SYNC mode for the archive destinations. There are more limitations for DG PDB, and you can check them in the topic DG PDB Configuration Restrictions in the official documentation (I recommend that you read it).

Please read my new blog post about the changes to the process. You can see how the process evolved and improved. Read it here.

2020-2021

My first post of 2021 is just a Thank You. Thanks for reading my posts and following me on social media (Twitter/LinkedIn/Blog). Thanks for all of the 41,000 site visits during the last year and to everyone who attended my sessions at the online events. I hope I was able to help you with something about Oracle.

DB_UNIQUE_NAME, PDB, and Data Guard

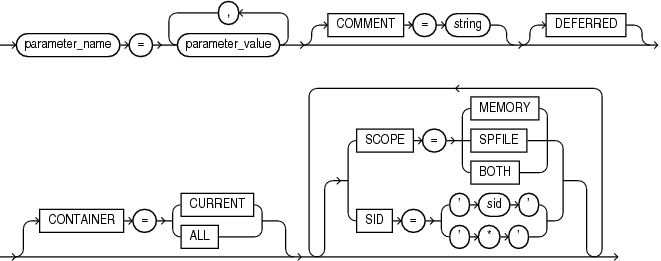

When you change parameters for the database, it is possible to specify the db_unique_name, which gives you more control over where the change is applied. This is very useful to limit the scope, but you need to be aware of some side effects. Even though it is not present in the official doc, you can use it. Check here some details that you need to take care of.

Exadata, Missing Metric

Understanding the metrics for Exadata Storage Server is important to understand how all the software features are being used, and all the details behind that. Here I will discuss one case where the FC_IO_BY_R_SEC metric can show imprecise values. I will also discuss one missing metric that could save a lot.

If you have doubts about metrics, you can check my post about them; it was an introduction, but it covers some aspects of how to read and use them. You can also check my other post, where I show how to use the metric DB_FC_IO_BY_SEC to identify database problems that can be hidden when checking only from the database side.

Exadata, Understanding Metrics

Metrics for Exadata give you a way to deeply see, and understand, what is happening in Exadata Storage Server and Exadata Software. Understanding them is fundamental to identify and solve problems that can be hidden (or even unseen) from the database side. In this post, I will explain details about these metrics and what you can do using them.

My last article about Exadata Storage Server metrics was about one example of how to use them to identify problems that do not appear on the database side. In that post, I showed how I used the metric DB_FC_IO_BY_SEC to identify bad queries.

The point for Exadata (that I made in that article) is that, most of the time, Exadata is so powerful that bad statements are handled without a problem because of the features that exist (flashcache, smartio, and others). But another point is that Exadata is usually a highly consolidated environment, where you “consolidate” a lot of databases, and it is normal that some of them have different workloads and needs. Using metrics can help you fine-tune your environment, but besides that, it gives you a way to check and control everything that is happening.

In this post, I will not explain each metric one by one, but guide you to understand metrics and some interesting and important details about them.

ZDLRA, Multi-site protection – ZERO RPO for Primary and Standby

ZDLRA can be used from a small single-database environment to big environments where you need protection in more than one site at the same time. At every level, you can use different features of ZDLRA to provide the desired protection. Here I will show how to reach zero RPO for both primary and standby databases. All the steps, docs, and technical parts are covered.

You can check the examples and the reference for every scenario in these two papers from the Oracle MAA team: MAA Overview On-Premises and Oracle MAA Reference Architectures. They provide good information on how to prepare to reduce RPO and improve RTO. In summary, the focus is the same: reduce the downtime and data loss in case of a catastrophe (zero RPO and zero RTO).

Multi-site protection

If you have looked at both papers before, you saw that, to provide good protection, it is desirable to have an additional site to, at least, send the backups to. And if you go higher, for GOLD and PLATINUM environments, you start to have multiple sites synced with Data Guard. These critical/mission-critical environments need to be protected against every kind of catastrophic failure, from a disk failure to a complete site outage (some need to follow specific legal requirements; banks are one example).

And the focus of this post is these big environments. I will show you how to use ZDLRA to protect both sites, reaching zero RPO even for standby databases. By doing that, you can survive a catastrophic outage (like an entire datacenter failure) and still have zero RPO. Going further, you can even have zero RPO if you completely lose one site when using real-time redo for ZDLRA, and this is not written in the docs, by the way.
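To make the RPO idea concrete, here is a small Python sketch that treats the RPO actually achieved as the gap between the last change that reached a protected copy and the moment of the failure. The function name `data_loss_window` and the timestamps are hypothetical and only illustrate the arithmetic; they are not taken from ZDLRA or from any real environment.

```python
from datetime import datetime, timedelta


def data_loss_window(last_protected: datetime, failure: datetime) -> timedelta:
    """Return how much work is lost: everything changed after last_protected."""
    if last_protected > failure:
        raise ValueError("last_protected cannot be later than the failure time")
    return failure - last_protected


if __name__ == "__main__":
    # Hypothetical failure time, used only for illustration.
    failure = datetime(2024, 1, 1, 12, 0, 0)

    # Redo shipped in real time: the protected copy is current at the failure.
    print(data_loss_window(failure, failure))

    # Only archived logs shipped, the last one 20 minutes before the failure.
    print(data_loss_window(failure - timedelta(minutes=20), failure))
```

The first case is the zero RPO target described above; the second shows why relying only on shipped archived logs leaves a window of lost changes.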