Calling DBCA is easy and it offers a lot of options; the initParams option lets us pass any parameter we want during database creation. But what about ASMCA? Can we pass parameters during the creation of the ASM instance? The answer is yes, so let’s check how to do this easily during the Oracle Grid creation/installation.

But why?

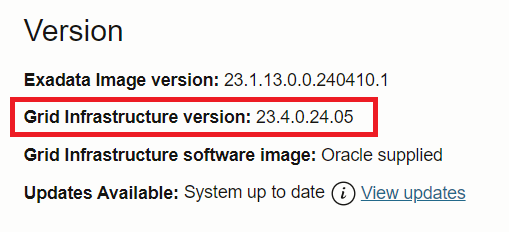

There are several occasions when we need to do that. One example is setting the parameter “_disk_sector_size_override”, which disables the sector size check at the O.S. level (allowing us to play with different sector sizes). Another is installing Oracle 23ai by setting the parameter “_exadata_feature_on=true” at the installation/creation phase (for lab and test purposes in a non-Engineered System environment, while Oracle has not yet released it for On-Prem).

For dbca it is easy to do that, but what about asmca? Until now, there were two options for Grid/ASM. The first is to install Grid 19c, set the parameter “_exadata_feature_on=true”, and upgrade to 23ai (similar to what I described here). The second is to install Grid, call root.sh, wait for the error, set the parameter, and resume/try again (Martin Klier described here how to do this).